Challenges of marketing attribution: Why it’s becoming harder to measure impact

Published on January 27, 2026/Last edited on January 27, 2026/12 min read

Sally Wills

Senior Content Strategy Manager

Marketing teams are all too familiar with this question: What's the ROI? It used to be that return on investment was easy enough to figure out. Attribution could be traced through channels and marketing efforts without too much trouble. But that's all changed.

Today, there's a widening gap between attribution and an understanding of how customers actually behave. In this article, we'll look at what exactly is changing and why measurement is shifting toward first-party data and experimentation, analytics that focus on customer lifetime value (CLV), and outcome-driven decisioning with the help of AI. We'll also look at common challenges with traditional attribution models and why understanding the context around the customer journey is key to navigating them.

TL;DR:

- Attribution is less reliable because journeys are fragmented, privacy reduces observable signals, and identity breaks across devices.

- Attribution models still help, but they’re not a complete explanation of “what worked.”

- The practical shift is toward first-party data, journey-level measurement, and experimentation to prove lift.

What is marketing attribution—and why is it breaking down?

Marketing attribution assigns credit to the touchpoints that influence a customer action. It’s how teams track which channels, campaigns, messages, and content contributed to actions like a purchase, signup, or upgrade.

But marketing attribution is breaking down because customer behavior is complex and rarely follows a single, trackable path. In the past, there were fewer channels, so the pathways were less complicated, but a modern customer journey more closely resembles a pinball machine than a funnel—it’s unpredictable, multi-channel, and spread across platforms and time.

Today, someone might read a blog post on their phone, see a product mentioned in a group chat, and later get served a social ad. They click through, browse on their desktop, download the app, and leave. Days later, they may come back via search after an offline conversation, and then purchase after a timely reminder.

Marketing attribution attempts to draw a straight line through all of these events, but many of the most persuasive moments happen in private channels or in the real world, where they leave no measurable signal.

The biggest attribution challenges in digital marketing today

Attribution challenges shift as technology matures and consumption trends change. Here are some of the challenges marketing teams may face today.

Fragmented customer journeys across channels and devices

Customers move between paid, owned, and earned channels, plus web and app experiences and offline moments like store visits, events, word of mouth, and quick conversations with friends or colleagues. Even when every channel reports “success,” stitching those touchpoints into a single story is difficult.

Incomplete or delayed data signals

Some data gaps are expected—customers switch devices, privacy choices limit tracking, and offline moments like store visits or word-of-mouth never show up as clean signals. On top of that, conversions can be delayed or aggregated in platform reporting, so the outcome arrives days later without the touchpoints that shaped it.

Other gaps are platform- and setup-related. If customer identity and events aren’t consistent across web, app, and channels, a journey can’t be stitched together even when the data exists. For example, a customer may click an ad on mobile, browse in the app, and then purchase via desktop—but without strong first-party IDs and reliable event instrumentation, that purchase may get attributed to “direct” or show up with missing context, making it harder to connect engagement to outcomes.
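To make the mobile-to-desktop example concrete, here is a minimal Python sketch of identity stitching. It assumes a hypothetical `id_map` that links device IDs to a first-party user ID (for instance, built from logins); the event fields and names are illustrative only and do not reflect any particular platform's schema.

```python
def stitch_journeys(events, id_map):
    """Group event names by first-party user ID, in time order.

    events: list of dicts with 'device_id', 'ts', and 'name' (hypothetical schema).
    id_map: {device_id: user_id}, assumed to come from logins or other
            first-party identifiers.
    Events from unmapped devices are kept under their own anonymous key.
    """
    journeys = {}
    for event in sorted(events, key=lambda e: e["ts"]):
        user = id_map.get(event["device_id"], f"anon:{event['device_id']}")
        journeys.setdefault(user, []).append(event["name"])
    return journeys


events = [
    {"device_id": "phone-1", "ts": 1, "name": "ad_click"},
    {"device_id": "phone-1", "ts": 2, "name": "app_browse"},
    {"device_id": "laptop-9", "ts": 3, "name": "purchase"},
]

# Only the phone is linked: the purchase is stranded on an anonymous device.
partial = stitch_journeys(events, {"phone-1": "user-42"})
# Both devices are linked: the purchase joins the rest of the journey.
full = stitch_journeys(events, {"phone-1": "user-42", "laptop-9": "user-42"})
print(partial)
print(full)
```

In the first call the purchase has no connection to the ad click, which is roughly how a conversion ends up looking "direct." In the second, the same events form one journey because both devices resolve to the same first-party ID.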

Conflicting metrics across platforms and teams

Every platform has its own definitions and incentives. One team optimizes for clicks, another for installs, another for revenue, and attribution reports end up reflecting organizational structure as much as customer behavior.

Difficulty tying engagement to long-term outcomes like retention and LTV

Most attribution reporting is built to explain one moment—the conversion. But retention and lifetime value build over weeks or months. A welcome series, a helpful in-app message, a loyalty update, and even a support interaction can all shape whether someone sticks around, upgrades, or buys again.

Traditional attribution rarely connects those post-conversion touchpoints to long-term outcomes, so teams end up optimizing for what drives the first conversion, not what keeps customers coming back.

Overconfidence in simplified attribution outputs

Attribution models can produce a neat, easy-to-report number when someone asks about ROI, even when the underlying signals are incomplete. A last click gets “the credit,” or one platform report makes it look like a single channel drove the outcome.

Teams then shift budget toward whatever those simplified outputs favor, even though the outputs don’t tell the full story, and end up under-investing in the cross-channel journeys, sequencing, and experiences that actually shape retention and lifetime value.

How privacy changes have accelerated attribution challenges

Privacy changes have made marketing attribution harder because fewer customer actions can be observed and linked across sites, apps, and devices. Journeys still happen across channels and time, but measurement has more missing steps between the touchpoint and the outcome.

Third-party cookie deprecation

As third-party cookies disappear, it’s harder to follow behavior across different websites. That breaks the chain between early research and later conversion, especially when someone returns days later through a different route.

Mobile OS privacy changes and consent frameworks

Mobile privacy settings and consent choices mean some ad interactions can’t be tied back to an individual customer’s later actions. A person might see an ad, browse in an app, then purchase on desktop, and those moments may show up as separate, unconnected events. That’s why mobile-heavy journeys often end up with more “unknown” influence in reporting.

Reduced cross-platform visibility

Individual platforms still report performance, but each one tells its own version of the story. It’s harder to reconcile those views into a single picture across paid, owned, and earned channels.

You can still measure performance, but you can’t assume every touchpoint will be visible or linkable.

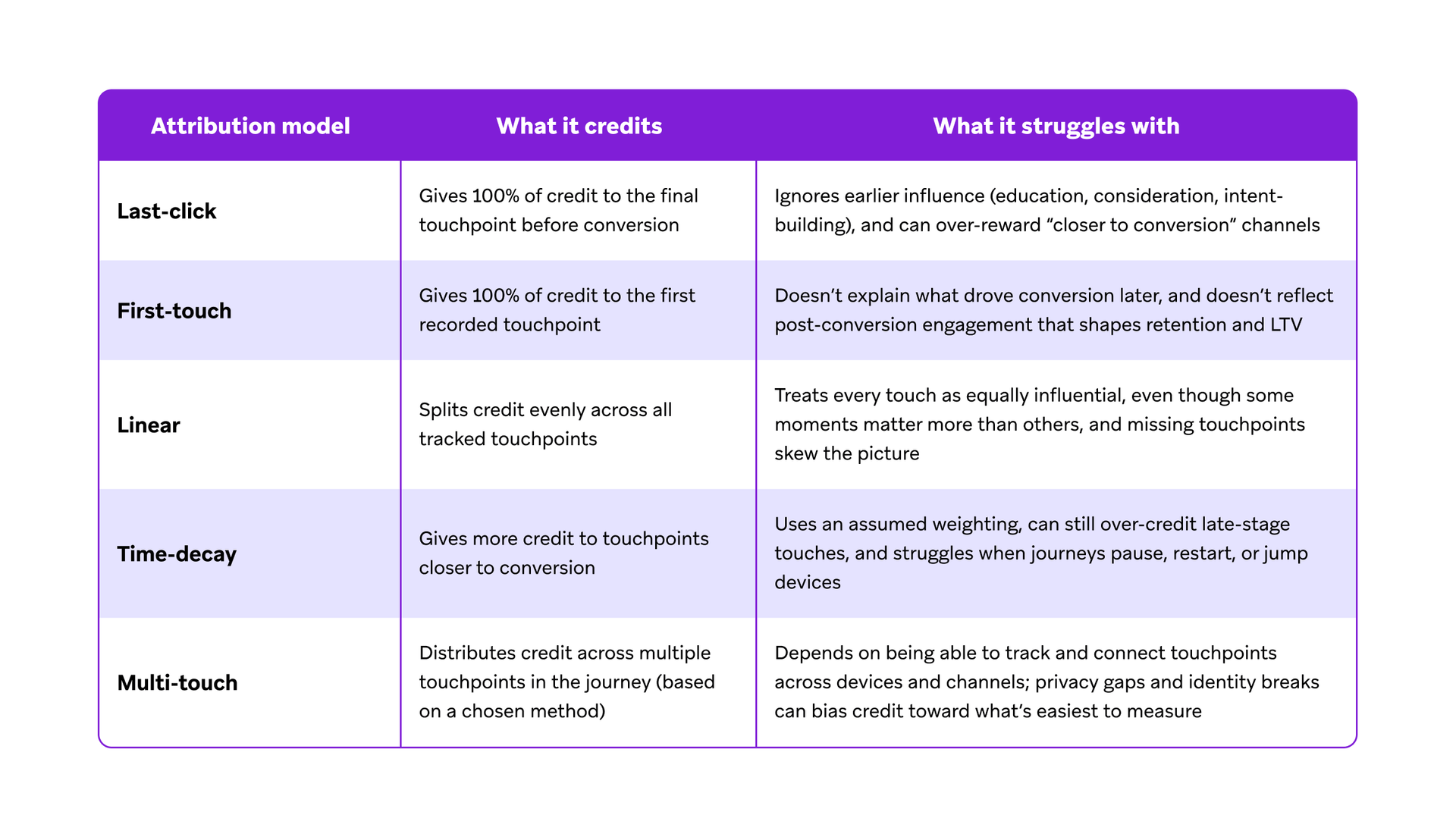

The limits of attribution models and the challenges of multi-touch attribution

Attribution models are ways of assigning credit across touchpoints. They can still be useful, but they are built on simplified rules about how influence works. That’s a tough fit for journeys that bounce across channels, devices, and time.

Here’s a quick look at different attribution models and how they work, or fall short.

Last-click

Last-click gives all the credit to the final touchpoint before conversion. It’s easy to explain and easy to report, but it often ignores the earlier messages and experiences that built intent.

First-touch

First-touch credits the first interaction in the journey. It can help teams understand which channels introduce new customers, but it doesn’t explain what moved someone from interest to action, or what kept them engaged afterward.

Linear and time-decay

Linear spreads credit evenly across touchpoints, while time-decay gives more credit to interactions closer to conversion. Both acknowledge that multiple touches matter, but the weighting is still a rule, not proof of what influenced the decision.
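The rule-based models described so far can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than any vendor's implementation: the `attribute` function, the sample journey, and the 7-day half-life used for time-decay are all hypothetical choices.

```python
from collections import defaultdict


def attribute(touchpoints, model="last_click", half_life_days=7.0):
    """Split one conversion's credit across an ordered list of touchpoints.

    touchpoints: list of (channel, days_before_conversion), oldest first.
    Returns {channel: credit}; credits sum to 1.0.
    """
    if not touchpoints:
        return {}
    n = len(touchpoints)
    if model == "last_click":
        weights = [0.0] * (n - 1) + [1.0]
    elif model == "first_touch":
        weights = [1.0] + [0.0] * (n - 1)
    elif model == "linear":
        weights = [1.0] * n
    elif model == "time_decay":
        # Assumed rule: weight halves for every `half_life_days` before conversion.
        weights = [0.5 ** (days / half_life_days) for _, days in touchpoints]
    else:
        raise ValueError(f"unknown model: {model}")
    total = sum(weights)
    credit = defaultdict(float)
    for (channel, _), weight in zip(touchpoints, weights):
        credit[channel] += weight / total
    return dict(credit)


# Hypothetical journey: blog read two weeks out, purchase right after an email.
journey = [("blog", 14), ("social_ad", 7), ("search", 1), ("email", 0)]
for model in ("last_click", "first_touch", "linear", "time_decay"):
    print(model, attribute(journey, model))
```

The same journey produces four different answers, and none of them is measured from customer behavior. Each is simply the output of the weighting rule that was chosen.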

Multi-touch

Multi-touch attribution aims to distribute credit across multiple interactions, but it depends on being able to track and connect those interactions. When identity is inconsistent across devices, or consent limits what can be tied together, multi-touch becomes patchy and can still over-value the touchpoints that are easiest to measure.

Across all of these models, the same issues show up. They can miss what really influenced the customer, and they don’t always help teams decide what to change next.

Why attribution breaks without journey-level context

Attribution breaks down when measurement is organized around channels and campaigns, because the customer experience doesn’t happen that way. Brands increasingly want and need to deliver 1:1 experiences based on behavior and context, but traditional attribution still tallies performance in broad buckets that miss how those experiences work together.

One touchpoint often sets up the next. Someone reads an email, then later converts through search. An in-app message answers a question that makes a paid click work. When each channel is judged on its own, that build-up disappears, and the “credited” touchpoint can look like it did all the work.

Attribution is good at describing where a conversion was recorded. It’s less useful for daily decisions like changing the message order, timing, frequency, or next best action for a specific audience. Those choices live in the journey, but attribution reports rarely point to the best next move.

Customers move through stages like onboarding, buying again, renewing, drifting away, and coming back. Measurement that follows those stages makes it easier to see which experiences are helping, and where people are dropping off, so teams can improve the journey rather than debate attribution channels.

How AI and experimentation help address attribution challenges

AI decisioning and experimentation help because they don’t depend on perfect attribution to be useful, and they don’t force ROI and spend into siloed channels or departments. Instead, they shift the focus to the personalized customer experience as a whole: they look at how customers respond, then keep adapting so journeys improve over time, even when some signals are missing.

Finding patterns in incomplete data signals

AI decisioning keeps learning from how each customer behaves, so it can spot what tends to work for different people, even when some touchpoints are missing. For example, if one customer responds to educational content in email and another engages more through in-app messages, AI decisioning can adapt the decisions it makes for each person based on what it learns over time and the goal you set.

Turning measurement into a test-and-learn loop

Experimentation helps because it measures lift directly (the difference in outcome between customers who received a change and a comparable group who didn’t), so teams don’t have to argue over which touchpoint gets credit. AI decisioning runs ongoing testing across the choices you control, like timing, channel, and frequency, and then updates based on what drives the outcome. That creates a steady learning loop that supports 1:1 experiences at scale, without turning personalization into an overwhelming, manual, message-by-message exercise.
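As a rough illustration of what "showing lift" means, the sketch below compares a control group with a treated group and reports absolute lift, relative lift, and a two-sided p-value from a pooled two-proportion z-test. The function name and the sample counts are made up for the example, and a real program would also need to consider sample size planning and repeated looks at the data.

```python
import math


def lift_report(control_users, control_conversions, treated_users, treated_conversions):
    """Compare conversion rates between a control group and a treated group."""
    p_control = control_conversions / control_users
    p_treated = treated_conversions / treated_users
    absolute = p_treated - p_control
    relative = absolute / p_control if p_control > 0 else float("nan")

    # Pooled two-proportion z-test (normal approximation).
    pooled = (control_conversions + treated_conversions) / (control_users + treated_users)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / control_users + 1 / treated_users))
    z = absolute / std_err if std_err > 0 else 0.0
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided

    return {
        "control_rate": p_control,
        "treated_rate": p_treated,
        "absolute_lift": absolute,
        "relative_lift": relative,
        "z": z,
        "p_value": p_value,
    }


# Hypothetical test: 10,000 customers per group.
report = lift_report(10_000, 400, 10_000, 460)
for key, value in report.items():
    print(f"{key}: {value:.4f}")
```

Because the two groups differ only in the change being tested, the result does not depend on stitching every touchpoint together, which is why it holds up when attribution data is incomplete.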

Optimizing for outcomes, based on what customers actually do

AI decisioning works from observed behavior, not guesses about what “should” work. It learns which actions are more likely to drive the KPI you care about, then adjusts as customer needs and context change. This is especially useful when attribution is incomplete, because you can still improve performance by measuring what increases conversions, renewals, repeat purchase, or lifetime value.

How Braze helps brands navigate attribution challenges

Braze helps marketing teams navigate attribution challenges by linking engagement, testing, and reporting to the same customer context, so decisions don’t hinge on a single attribution view.

Unified customer data activation

Braze brings together first-party data and real-time behavioral signals, so teams can recognize customers across touchpoints and act on a consistent set of events and attributes. That makes it easier to understand what happened before a conversion, what happened after it, and how engagement influences longer-term outcomes.

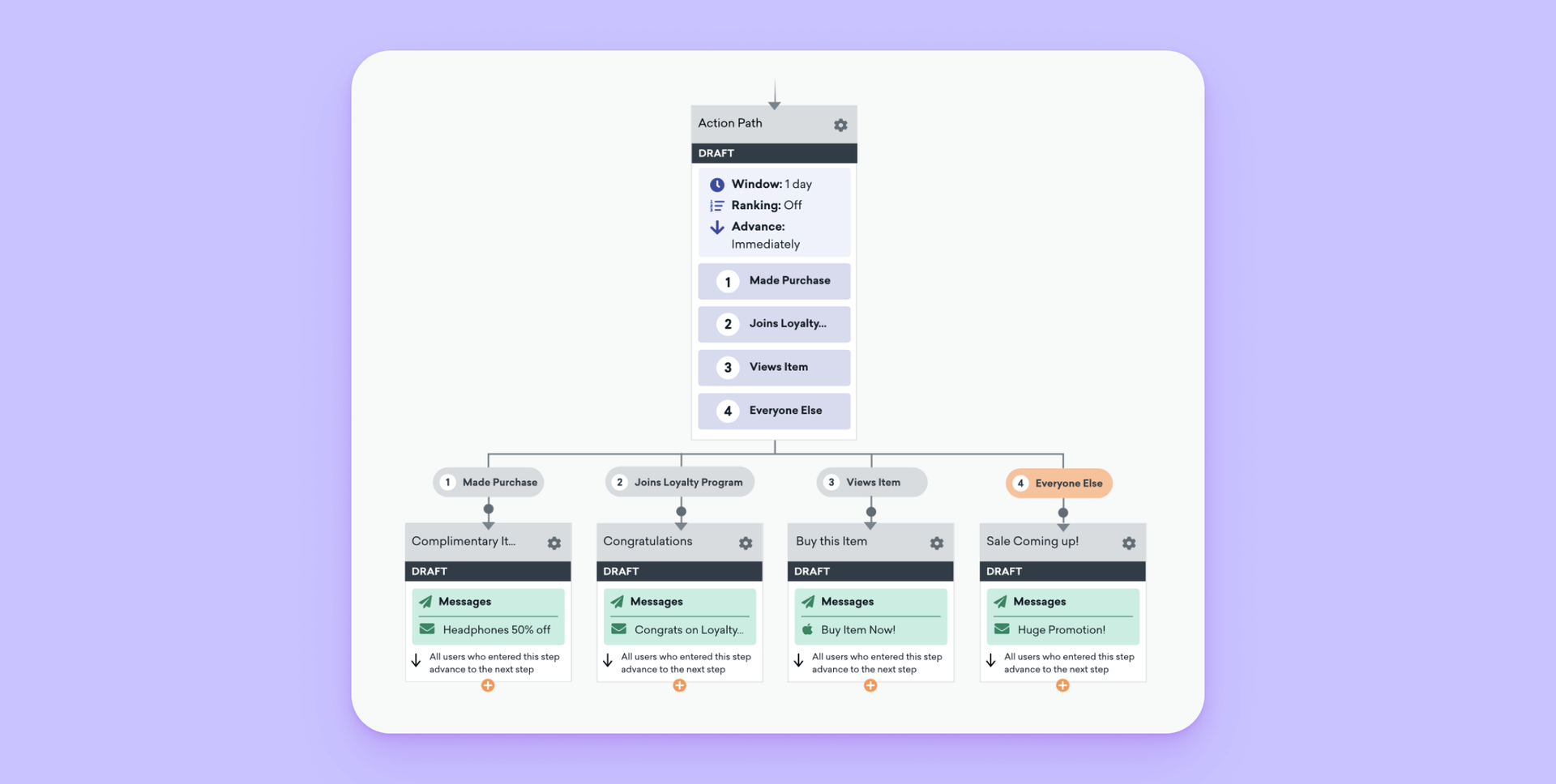

Cross-channel journey orchestration with Canvas

Braze Canvas is a drag-and-drop journey orchestration tool that lets teams build and run cross-channel journeys from one place. Journeys can branch based on what a customer does, pause until they take an action, or switch channels based on engagement. Instead of measuring isolated sends, teams can measure how the full sequence performs over time.

Built-in experimentation and testing

Braze supports testing inside campaigns and Canvas, so teams can compare paths and measure lift. BrazeAI™ adds practical tools that help marketers personalize faster, like predictive insights and intelligent selection features that tailor what customers see based on likely behavior. BrazeAI Decisioning Studio™ goes further by running always-on experimentation and learning which choices drive the KPI you set, then adapting decisions as customer behavior changes.

Real-time engagement analytics

Braze Reporting & Analytics are built around live campaigns and Canvas. Teams can monitor performance as it happens, compare results across channels and journeys, and spot where customers are dropping off. That helps teams make changes while the moment is still relevant, instead of waiting for delayed attribution reporting.

Lifecycle and outcome-focused measurement

Because Braze is centered on journeys, measurement maps naturally to lifecycle stages like onboarding, activation, repeat purchase, renewal, churn risk, and win-back. That shared view helps teams align around the same outcomes across marketing, product, and lifecycle teams, then put time and budget toward the journeys that are driving the most impact.

Common problems with marketing attribution

Most problems with marketing attribution come from using it as a final answer, instead of a starting point for better questions. Attribution can be helpful, but it’s rarely complete on its own.

Expecting perfect attribution accuracy

Attribution will always have gaps. Customers switch devices, use private channels, and make decisions offline. Treating every report as “the truth” creates false certainty, and teams end up debating numbers that were never fully observable in the first place.

Measuring channels instead of customer outcomes

Channel reports make it tempting to optimize for channel metrics. Customer outcomes sit elsewhere—in reaching value, coming back, upgrading, renewing, and sticking around. When measurement stays at the channel level, teams can hit performance targets while missing what actually moves retention and lifetime value.

Treating attribution as a one-time setup

Attribution models don’t stay accurate forever. Channel mix and creative change, audiences behave differently over time, and new constraints show up. If attribution isn’t reviewed and updated, teams end up making decisions based on outdated conditions.

Ignoring experimentation in favor of reports

Reports can’t provide the full picture, and they lose relevance quickly as conditions change. Experimentation fills many of the gaps by testing what happens when you adjust timing, messaging, audience rules, or the journey path, then measuring how outcomes move. Because testing can run continuously, teams get fresher feedback to support day-to-day decisions.
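One common building block for continuous testing is deterministic assignment, so a customer stays in the same test group across sessions and devices once they are identified. The sketch below hashes a user ID together with an experiment name; the function, the variant labels, and the experiment name are hypothetical, and this is a generic technique rather than a description of how any specific platform assigns variants.

```python
import hashlib


def assign_variant(user_id, experiment, variants=("control", "variant_a", "variant_b")):
    """Deterministically map a user to one variant of an experiment.

    Hashing the experiment name with the user ID keeps assignments stable
    for a given test while making them independent across different tests.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


# The same user always lands in the same group for a given experiment.
print(assign_variant("user-42", "send_time_test"))
print(assign_variant("user-42", "send_time_test"))

# Across many users, groups come out roughly equal in size.
counts = {}
for i in range(3000):
    variant = assign_variant(f"user-{i}", "send_time_test")
    counts[variant] = counts.get(variant, 0) + 1
print(counts)
```

Stable assignment matters for measurement: if customers drift between groups, the comparison between groups no longer isolates the change being tested.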

Key takeaways on marketing attribution challenges:

Attribution can add context, but it shouldn’t be the only standard for what “worked.” These are the things to keep your eye on in 2026:

- Attribution is harder because journeys are more complex. Customers move across channels, devices, and time, and many influential moments never show up as trackable signals.

- Privacy has reduced visibility, not insight. Teams can still learn what’s working by leaning on first-party data, stronger lifecycle reporting, and testing.

- Models alone are insufficient. An attribution model can assign credit, but it won’t reliably tell you what to change next.

- Better measurement comes from unified data, experimentation, and marketing orchestration. When engagement and measurement run from the same customer context, it’s easier to improve performance without chasing perfect attribution.
