The Ultimate Guide to Data Streaming Technologies

Published on March 25, 2025/Last edited on March 25, 2025/18 min read

Team Braze

Often brands have the data they need to give customers what they want when they want it, but many struggle to use it effectively. Building personalized messaging across different channels is often resource-intensive, especially when campaign data isn’t managed in one dashboard. Data sits unused in complex pipelines, or isn’t tapped until it’s already out of date, leaving brands working from incomplete views of their customers. Thankfully, there’s a better way.

In this guide to all things data streaming technologies, we’ll explore:

- What Is Data Streaming?

- Batch Processing vs. Stream Processing: What’s The Difference?

- What Are Data Streaming Technologies?

- Why Are Data Streaming and Data Streaming Technologies Important?

- Advantages of Stream Processing vs. Batch Processing for Marketers

- Data Streaming Technologies Use Cases

- 3 Examples of Real Brands Leveraging Data Streaming Technologies

- What to Look for When Considering Data Streaming Technologies

- Final Thoughts

- Data Streaming FAQs

Let’s get started.

What is data streaming?

Data streaming processes data in real time, as soon as it is generated, rather than waiting to process it in batches on a schedule such as daily or weekly.

It is a more modern approach to processing data that speeds up the time to insight and action, helping businesses make faster data-driven decisions and better fuel marketing campaigns for higher customer engagement. In the past, many brands uploaded data in batches at specific times, leading to outdated experiences.

Data streaming is increasingly essential for any organization that needs to act on immediate customer insights. For example:

- eCommerce: Real-time inventory updates and personalized recommendations based on current customer behavior

- Finance: Notifications with accurate, up-to-date account information

Batch Processing vs. Stream Processing: What’s The Difference?

What is batch processing?

As the name suggests, batch processing handles data in batches. Data is bundled together over set windows of time, such as every 24 hours, or until a certain volume of data has accumulated, and then processed all at once. As a result, batch processing has latency built in: nothing is processed until the batch closes.

This means any insights you gain from batch-processed data may already be out of date by the time you see them. That’s not an issue if you’re using the data to spot historical trends or for reporting, but if you need to act fast on your data for competitive and commercial advantage, batch data is more of a hindrance than a help.

What is stream processing?

Stream processing (also called data streaming) processes unbounded data, meaning data that arrives continuously with no defined end, in real time and on an ongoing basis. Each unit of data is processed as soon as it arrives, providing a live view of the interactions a customer has with a brand.

This means you have an up-to-date picture of how users are engaging with your brand—like your website, app, messages, social media, and ads. As a result, you can tailor your messages based on their current behavior, wants, likes, and interests, before they move on to something new.
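To make the difference concrete, here is a minimal Python sketch under simplified assumptions: events are plain in-memory objects, and the `Event` class, event names, and `demo` helper are hypothetical, not part of any Braze API. The batch version can only act once the whole window has been collected, while the streaming version handles each event as soon as it arrives.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator


@dataclass
class Event:
    user_id: str
    name: str  # hypothetical event names, e.g. "add_to_cart", "purchase"
    timestamp: float  # seconds since epoch


def process_in_batch(events: Iterable[Event], handle: Callable[[list[Event]], None]) -> None:
    """Batch: wait until the whole window has been collected, then process it at once."""
    batch = list(events)  # nothing is handled until every event in the window has arrived
    handle(batch)


def process_as_stream(events: Iterable[Event], handle: Callable[[Event], None]) -> None:
    """Stream: handle each event the moment it arrives."""
    for event in events:
        handle(event)


def demo(use_stream: bool) -> list[str]:
    """Records the order in which events arrive and are handled."""
    log: list[str] = []

    def source() -> Iterator[Event]:
        # Simulates events arriving one at a time from a website or app.
        for i, name in enumerate(["add_to_cart", "purchase"]):
            log.append(f"arrived {name}")
            yield Event("user-1", name, float(i))

    if use_stream:
        process_as_stream(source(), lambda e: log.append(f"handled {e.name}"))
    else:
        process_in_batch(source(), lambda b: log.append(f"handled batch of {len(b)}"))
    return log


print(demo(use_stream=True))
# ['arrived add_to_cart', 'handled add_to_cart', 'arrived purchase', 'handled purchase']
print(demo(use_stream=False))
# ['arrived add_to_cart', 'arrived purchase', 'handled batch of 2']
```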

What are data streaming technologies?

Data streaming technologies are software tools built to handle live data flows. They receive, store, and process data as it is captured, allowing businesses to act on recent, relevant data and insights.

This type of tech typically consolidates live data streams from multiple sources, such as your website and app analytics, CRM activity, social media data, and email open rates, to create a single source of truth about your customers’ activities. The result is a 360-degree customer profile that stays up to date with each customer’s latest interactions with your brand. Imagine that!
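A heavily simplified sketch of how events from several sources might be merged into one profile per customer, assuming a hypothetical in-memory `ProfileStore` rather than any real platform’s data model. Because streamed events can arrive out of order, it keeps a per-attribute timestamp so an older event arriving late cannot overwrite newer data.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class ProfileStore:
    """Hypothetical in-memory store holding one merged profile per user ID."""

    profiles: dict[str, dict[str, Any]] = field(default_factory=dict)
    # When each (user, attribute) was last set, so a late-arriving older event
    # cannot overwrite a newer value.
    updated_at: dict[tuple[str, str], float] = field(default_factory=dict)

    def apply(self, user_id: str, source: str, timestamp: float, attributes: dict[str, Any]) -> None:
        profile = self.profiles.setdefault(user_id, {})
        for key, value in attributes.items():
            if timestamp >= self.updated_at.get((user_id, key), float("-inf")):
                profile[key] = value
                self.updated_at[(user_id, key)] = timestamp
        # Remember which systems have contributed data about this user.
        profile.setdefault("sources", set()).add(source)


store = ProfileStore()
store.apply("user-1", "website", 100.0, {"last_viewed": "scarf"})
store.apply("user-1", "email", 105.0, {"opened_newsletter": True})
store.apply("user-1", "app", 90.0, {"last_viewed": "hat"})  # older event arriving late
print(store.profiles["user-1"]["last_viewed"])  # scarf
print(sorted(store.profiles["user-1"]["sources"]))  # ['app', 'email', 'website']
```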

Additionally, data streaming platforms often support two-way synchronization, meaning they can send data in real time as well as receive it. Once you’ve processed the data, you can act on it right away to deliver personalized content back to individual customers, based on their live behavior.

Why are data streaming and data streaming technologies important?

Data streaming technologies help brands more effectively analyze and act on their customer data.

By consolidating live data from different sources, data streaming platforms help marketers de-silo their customer data platforms and create a single source of actionable consumer insights.

This unlocks the ability to create truly personalized marketing campaigns at scale, as well as create cohesive cross-channel journeys that engage customers wherever they access your brand.

Advantages of stream processing vs. batch processing for marketers

For marketers who are looking to move quickly, stream processing can deliver better outcomes than batch processing.

Customers continue engaging, browsing, and shopping between batch processing runs, so batch-processed data can become obsolete before you’ve even had time to act on it. For example, if you’re an eCommerce brand that batch processes data every 24 hours, the data at hand might suggest that a customer abandoned their cart after adding three items to it. While you send that customer through a campaign flow to complete the purchase, they might have kept browsing and already bought the items. A reminder to complete a purchase they’ve already made is likely to annoy them.

Act on old data and you could end up frustrating and confusing customers, for example by offering a discount on something they’ve already bought or recommending content they’ve already consumed (and told you they disliked).

Here’s how live data translates into better marketing and happier customers.

- Real-time insights: Streaming data lets you act on live customer behaviors, like automatically triggering offers when a user shows signs of buying propensity or risk of churn (check out Data Automation for Marketers for more).

- Advanced personalization: Live data keeps your personalization efforts up to date, reflecting customers’ latest preferences, purchases, and interests.

- Faster decision-making: Marketers can adjust campaigns or experiments in response to live feedback, rather than waiting for batched updates, improving performance and outcomes.

All of this can drive deeper customer engagement, improve outcomes across conversions and retention, and deliver commercial value for your business.

Data Streaming Technologies Use Cases

1. Acting on customer behavior and preferences with real-time marketing personalization

Sending a post-purchase message asking customers to rate items is a great way for brands to strengthen customer engagement, but only if the customer actually made the purchase and didn’t return the item.

The greater the delay between when data is generated and when it’s available for marketing, the greater the risk to customer engagement efforts. That’s particularly true when customers take actions, such as completing a conversion or moving from one segment to another, in the gap between when the data is generated and when it is processed and put into action. Platforms like Braze can help brands avoid data latency issues and steer clear of sending outdated campaigns powered by inaccurate or incomplete data.

2. Democratizing access to your company’s data

One of the benefits of live-streaming data is that it can provide intuitive tools and insights for everyone. Once the initial system configuration is completed, a platform like Braze can enable non-technical team members to access and act on holistic customer data.

For example, Papa John’s UK used Braze to empower franchise owners to create their own personalized campaigns in their local communities, with a simple user interface that lets them segment and message customers based on past purchases and preferences.

3. Unlocking better, more accurate data analysis

Accessing large-scale data sets in real time is easier than ever, and so is making sense of them. The right data streaming technologies offer up-to-the-minute analytics and reporting to help you understand the impact of marketing strategies across platforms and channels.

With Braze reporting and analytics tools, marketers can visualize this stream of real-time data through intuitive, customizable reports and dashboards without requiring technical expertise. These tools allow brands to compare many campaigns, analyze performance across channels, and quickly identify optimization opportunities—all while maintaining a single source of truth for customer engagement metrics that can be shared across teams.

For example, Braze Currents can stream insights—such as customer behavior events and messaging engagement events—to brands’ analytics platforms to support large-scale data analysis to optimize campaign performance and customer outcomes.

3 Examples of Real Brands Leveraging Data Streaming Technologies

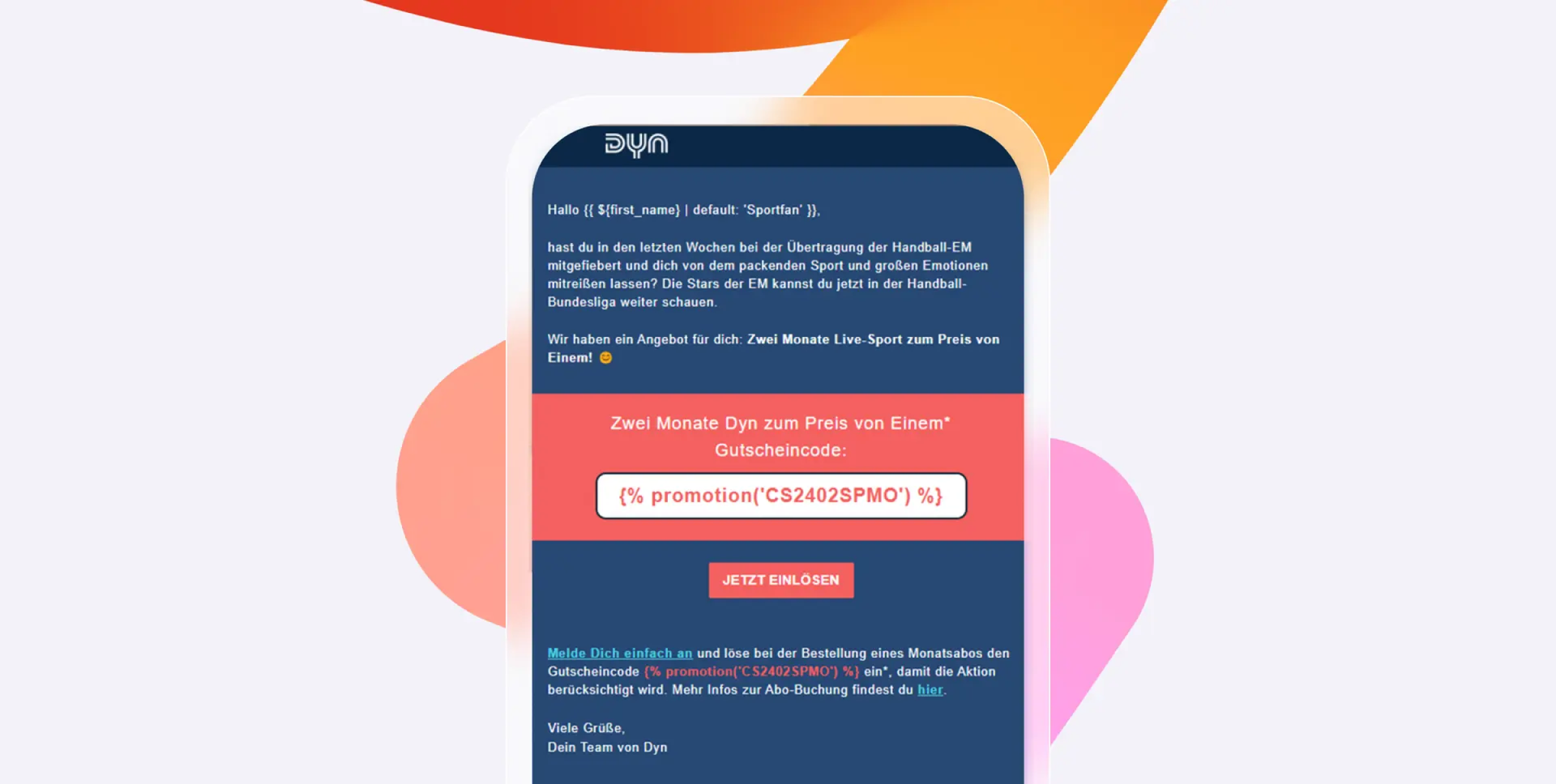

1. Dyn Media improves customer retention with the Braze Data Platform

Dyn Media, part of Axel Springer, is a premier sports content platform in Europe, created by sports fans for sports fans, with a mission to inspire and connect people through genuine, high-quality content. Committed to long-term relationships and innovation, Dyn Media focuses on customer engagement to foster a sense of community among fans, offering personalized and authentic experiences that maximize customer lifetime value (CLV) and enhance user satisfaction.

Dyn Media used Braze to implement personalized email campaigns aimed at re-engaging lapsed customers and offering subscription extensions to loyal users. By leveraging Snowflake’s seamless data integration with the Braze Data Platform, they achieved precise audience targeting and ran effective A/B tests. These personalized win-back and extension campaigns significantly enhanced customer retention and engagement, leading to higher re-engagement rates, successful subscription extensions, and ultimately increased customer satisfaction and CLV:

- 10% re-engagement rate from win-back campaigns

- 80% unique open rate for the extension campaign

- 75% of target users extended their subscription (after three waves)

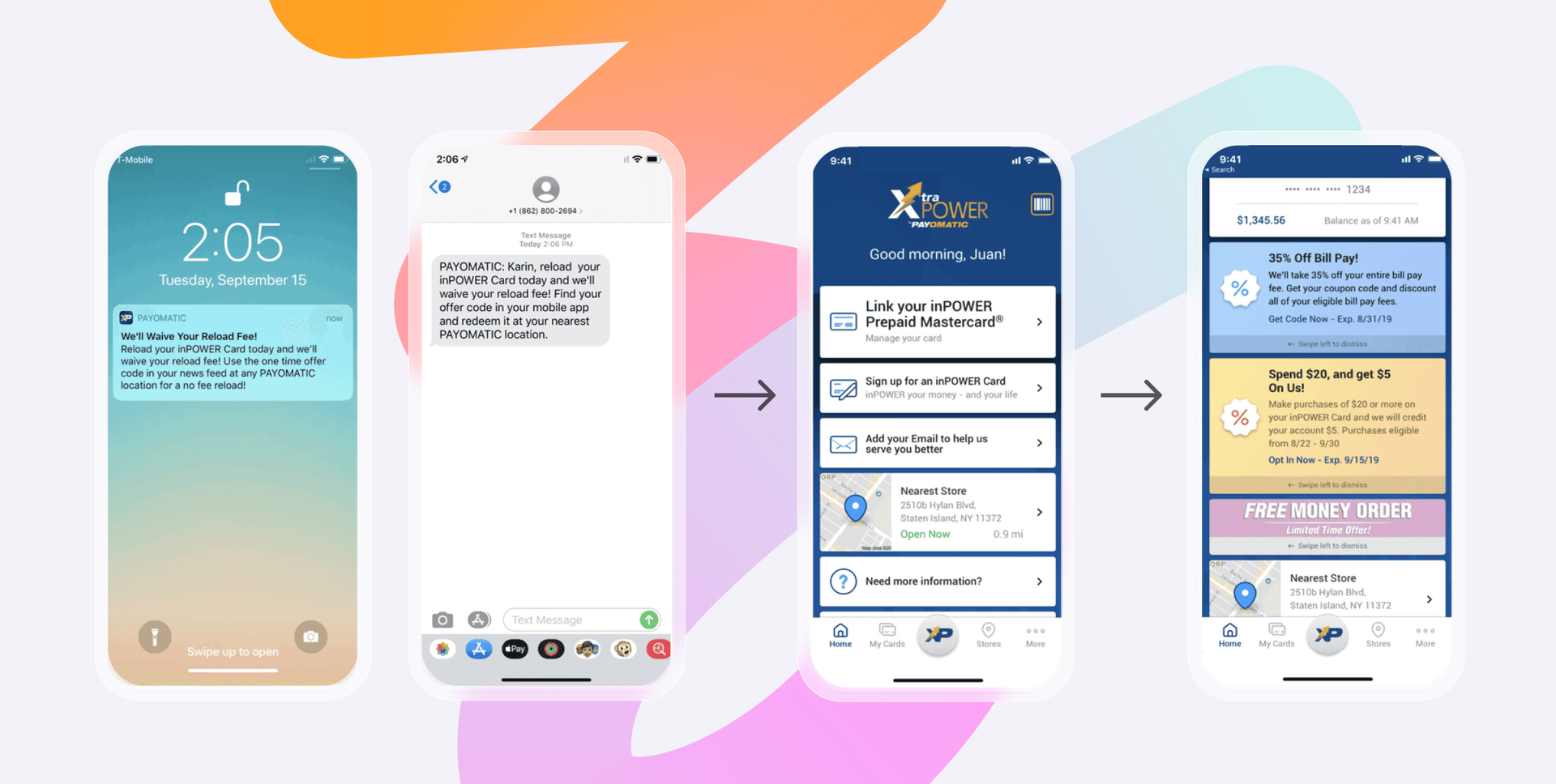

2. Payomatic Uses Braze + Snowflake to Power Real-Time Personalization

Payomatic, New York’s largest provider of check cashing and financial services, leveraged Braze and Braze Alloys technology partner Snowflake to unlock a cloud-based 360-degree customer view. By ensuring their data was actionable, they were able to reach customers at the right stage in the customer journey with personalized marketing campaigns that have delivered stronger results, including:

- 50% increase in prepaid cardholder mobile app penetration

- 32% uplift in direct deposit via the app

- 11% increase in mobile app engagement

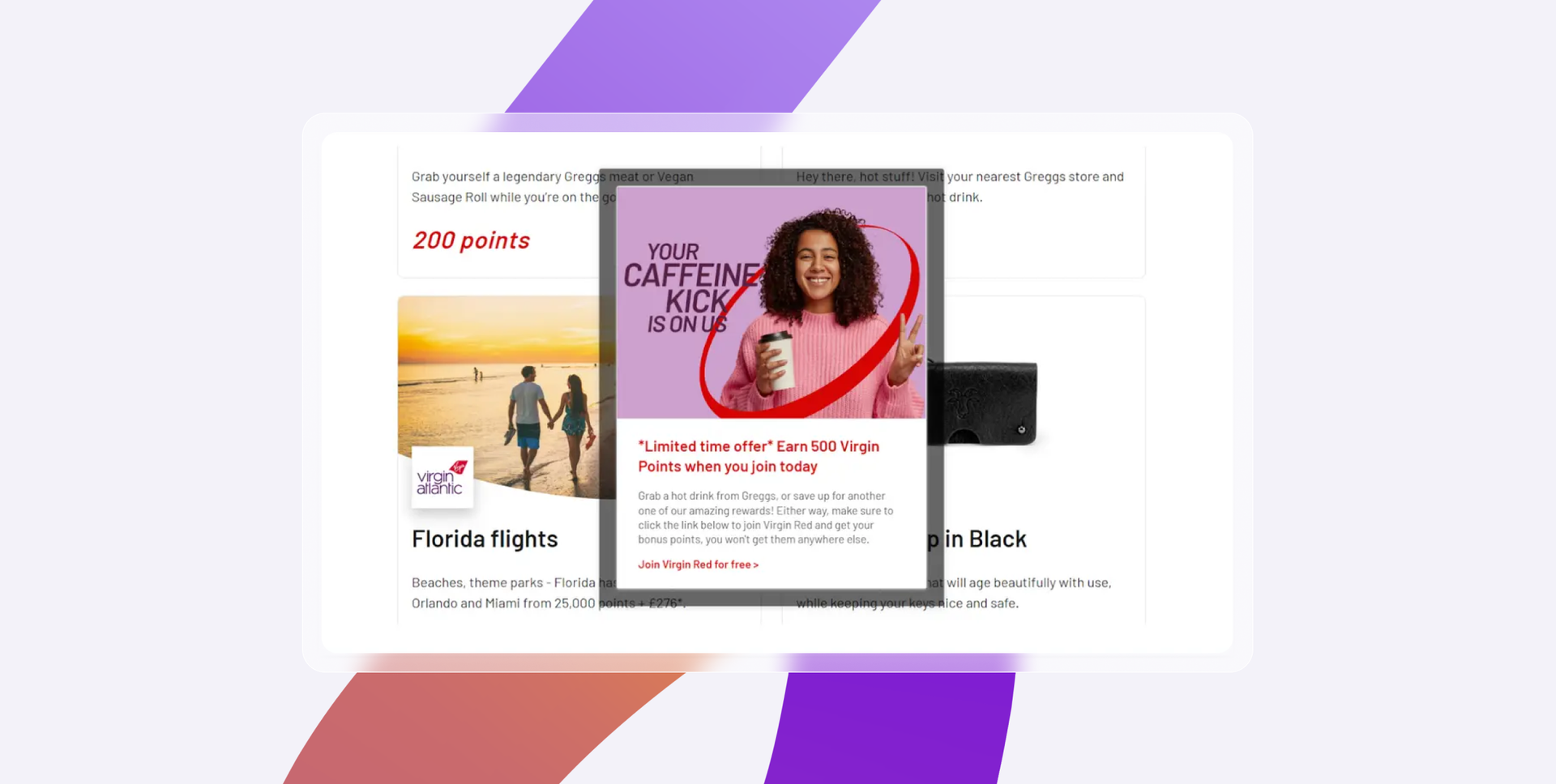

3. Virgin Red Leverages Data Streaming for Timely, Data-Driven Personalized Marketing Campaigns

Before partnering with Braze, the marketing team at Virgin’s rewards club, Virgin Red, relied on disconnected customer engagement tools that created time-consuming data management and analysis challenges.

To streamline operations, Virgin Red turned to Braze Cloud Data Ingestion (CDI) to get the data they needed for their campaigns, without constant reliance on engineering resources.

Now, setting up ingestion of member attributes (location, points balance, product favorites, and so on) takes just a few clicks.

What to look for when considering data streaming technologies

1. Streaming Data Integration Capabilities

For marketers, data streaming platforms work best when they're integrated with other tools in your tech stack. For example, integrating with:

- Data warehouses: To store and analyze large volumes of data for long-term trends and insights

- Business Intelligence tools: To generate real-time reports and dashboards, helping marketers make data-driven decisions quickly

- Customer Data Platforms: To create unified, up-to-date customer profiles, ensuring personalized marketing messages across channels

- Customer Engagement Platforms: To trigger personalized campaigns instantly based on live customer behavior and actions

Braze integrates with a variety of partners, including data warehouses like Amazon Redshift, Snowflake, Google BigQuery, and Microsoft Fabric; BI tools like Looker; analytics platforms like Amplitude; data lakehouses like Databricks; and customer data platforms (CDPs) like mParticle and Segment.

As a result, you can stream, analyze, and automatically act on live customer data to maximize customer engagement in the moment.

2. Real-Time Streaming

The phrase “real-time data” gets thrown around a lot, but many platforms use it for data processing that isn’t actually live, where processing happens every few hours or even just once per day. True real-time streaming processes each event as it happens.

3. Two-Way Synchronization

Two-way synchronization means your system doesn’t just receive data in real time; it can send data too. For example, when you receive data about a product a customer has bought, you can respond by surfacing relevant product recommendations to them. Two-way sync means you aren’t just getting insights; you can also act on them.

4. Support for Both Speed and Scale

As important as it is to process data without delays, that speed isn’t enough on its own. Your tech stack must also be able to handle massive scale.

At Braze, our systems have been built to support truly epic scale—we supported more than 50 billion messages during the four-day Black Friday/Cyber Monday period in 2024, breaking our previous records for send volumes during this period. That included 13.9 billion messages sent on Black Friday and 13.2 billion messages on Cyber Monday.

Final Thoughts

By processing data in real-time, businesses can gain immediate insights into customer behavior, enabling them to deliver personalized experiences that resonate with their audience. This shift from traditional batch processing to stream processing not only improves the accuracy and relevance of marketing efforts but also empowers brands to act swiftly on customer interactions, ultimately driving higher engagement and satisfaction.

Embracing these technologies can streamline operations and foster deeper connections with customers, paving the way for sustained growth and loyalty.

Data Streaming FAQs

What’s unbounded data?

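Unbounded data is data with no defined end: it keeps arriving continuously, like website clicks or app events. Data streaming is the practice of handling each unit of that data individually as it comes in, enabling ongoing, real-time data processing across systems. The term is sometimes used loosely as another name for data streaming itself.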

What’s microbatching?

Microbatching is a type of data processing that sits between traditional batch processing and data streaming: data is processed in a series of small batches at much shorter intervals (for example, every few seconds or minutes) than traditional batch processing.
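Microbatching can be sketched as grouping a time-ordered stream into fixed windows. In this minimal Python example, the `micro_batches` function and its `interval` parameter are illustrative assumptions; setting `interval` to 24 hours would behave like a daily batch, while shrinking it moves the pipeline closer to per-event streaming.

```python
from typing import Iterable, Iterator, Optional, TypeVar

T = TypeVar("T")


def micro_batches(events: Iterable[tuple[float, T]], interval: float) -> Iterator[list[T]]:
    """Group time-ordered (timestamp, payload) pairs into fixed windows of `interval` seconds.

    Assumes events arrive in timestamp order; windows are aligned to the first event.
    """
    batch: list[T] = []
    start: Optional[float] = None
    current_window: Optional[int] = None
    for ts, payload in events:
        if start is None:
            start = ts
        window = int((ts - start) // interval)
        # Crossing into a new window closes the previous micro-batch.
        if current_window is not None and window != current_window:
            yield batch
            batch = []
        current_window = window
        batch.append(payload)
    if batch:
        yield batch  # flush the final, possibly partial, window


events = [(0.0, "a"), (0.4, "b"), (1.2, "c"), (1.9, "d"), (3.5, "e")]
print(list(micro_batches(events, interval=1.0)))
# [['a', 'b'], ['c', 'd'], ['e']]
```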

What is data latency?

Data latency is the time delay that happens between when data gets created and when it’s available to be used.
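As a worked example, latency is simply the difference between the two timestamps. The times below are illustrative assumptions, not measurements: an event created at 09:00 and picked up by a hypothetical nightly batch job at 02:00 has 17 hours of latency, versus a couple of seconds for a hypothetical streaming pipeline.

```python
from datetime import datetime, timedelta


def data_latency(created_at: datetime, available_at: datetime) -> timedelta:
    """Data latency: the delay between when data is created and when it can be used."""
    return available_at - created_at


# Illustrative timestamps only, not measurements from any real system.
cart_event = datetime(2025, 3, 25, 9, 0, 0)     # customer adds an item to their cart
nightly_batch = datetime(2025, 3, 26, 2, 0, 0)  # hypothetical nightly batch job at 02:00
streamed = datetime(2025, 3, 25, 9, 0, 2)       # hypothetical streaming pipeline, ~2 s later

print(data_latency(cart_event, nightly_batch))  # 17:00:00
print(data_latency(cart_event, streamed))       # 0:00:02
```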

What are the key benefits of data streaming for customer engagement?

Data streaming gives marketers live, real-time data, which lets businesses act in the moment instead of waiting until it is (possibly) too late. This can boost customer engagement and satisfaction, and in turn increase loyalty and revenue.

For example, imagine a customer has filled their cart on your eCommerce site but hasn’t checked out. With streaming data, that behavior can be identified quickly and trigger outreach, such as an email or SMS, to encourage the customer to complete their purchase. It’s about striking while the iron is hot to re-engage the customer.

If you only had access to batch-processed data, you might not identify the abandoned cart for days, by which time the customer may have lost interest in buying from you or shopped elsewhere.
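A minimal sketch of the abandoned-cart logic above, assuming a hypothetical `CartAbandonmentTracker` that receives every streamed event and a scheduler that calls `due_reminders` periodically; the event names and one-hour threshold are illustrative. Because a purchase event closes the cart as soon as it arrives, a customer who has already bought never gets the reminder.

```python
from dataclasses import dataclass, field


@dataclass
class CartAbandonmentTracker:
    """Hypothetical tracker: flags carts with no purchase within `timeout` seconds."""

    timeout: float
    # user_id -> time of that user's most recent add_to_cart event
    open_carts: dict[str, float] = field(default_factory=dict)

    def on_event(self, user_id: str, name: str, timestamp: float) -> None:
        """Called for every streamed event, as soon as it arrives."""
        if name == "add_to_cart":
            self.open_carts[user_id] = timestamp
        elif name == "purchase":
            # A purchase closes the cart, so no reminder will be sent.
            self.open_carts.pop(user_id, None)

    def due_reminders(self, now: float) -> list[str]:
        """Returns users whose carts have sat idle for `timeout` seconds, once each."""
        due = [user for user, added in self.open_carts.items() if now - added >= self.timeout]
        for user in due:
            del self.open_carts[user]
        return due


tracker = CartAbandonmentTracker(timeout=3600)  # hypothetical one-hour threshold
tracker.on_event("alice", "add_to_cart", 0)
tracker.on_event("bob", "add_to_cart", 0)
tracker.on_event("bob", "purchase", 600)  # bob checks out ten minutes later
print(tracker.due_reminders(now=1800))  # []
print(tracker.due_reminders(now=3600))  # ['alice']
```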

It isn’t just about abandoned carts and eCommerce. Live streaming data has many other benefits, such as offering better customer support because you can see up-to-date information about how a customer has been interacting with your services, or adjusting A/B experiments in real time based on live customer interactions.

How does data streaming improve the personalization of customer experiences?

Data streaming improves the customer experience through enhanced personalization. By using live data, businesses can personalize their interactions with customers in multiple ways – from content to timing and device, making their messaging more relevant and engaging.

For example, marketers can use live data about consumer behavior to tailor content to their most recent interactions and interests.

Imagine a streaming service serving up on-demand entertainment. Real-time streaming data provides the business with up-to-date insights into what each viewer is watching at that moment.

Are they interacting with true crime documentaries or rom-coms? This live information lets the service curate a more relevant list of recommendations for their next watch, increasing the likelihood of engagement.

Or what if the viewer abandoned a series after episode 3? Streaming data would let the provider reach out with a message about a surprising development in episode 4 to entice them back. It would also let the provider send that message at the time the viewer typically engages with the service, and on the device they use most, to further increase engagement.

Similarly, an eCommerce store can use live streaming data to surface relevant recommendations based on a customer’s most recent purchase, such as gloves to go with a scarf they’ve bought, or products linked by color or style. Live inventory data can also be used to suppress promotions for items that are out of stock, sparing customers frustration.

What industries benefit most from real-time data streaming?

Data streaming is highly beneficial for businesses that need (or want) to act on instant feedback. Here are a few examples of industries that benefit most from streaming data, and how they use it.

- eCommerce: Personalized shopping experiences and real-time inventory updates

- Finance: Account updates, fraud detection

- Media and entertainment: Personalized content recommendations, notifications about new series

- Logistics: Delivery tracking and order updates, route optimization

- On-demand rides: Dynamic ride matching and real-time vehicle tracking

- Health and social care: Remote patient monitoring

Having real-time streaming data lets these businesses make decisions based on live behavior to improve the customer experience.

How does data streaming support cross-channel marketing efforts?

Modern consumers interact with your brand across multiple channels, like your website, app, email, SMS, and WhatsApp. To have an actionable understanding of their engagement with your brand, you need to know what they’ve been doing on every channel. And to avoid any brand-damaging faux pas, you need to know in real time.

Why? Imagine a customer has clicked through to your website, added a product to their cart, and then completed the purchase. If your system doesn’t integrate real-time data, you might send them a follow-up email an hour later, asking them to complete their purchase—when in reality, they've already bought the product. Embarrassing!

Using a platform like Braze, marketers can consolidate all of the data from different sources into a 360-degree profile of each customer. This means you have an up-to-date picture of customer behavior as they interact with your brand.

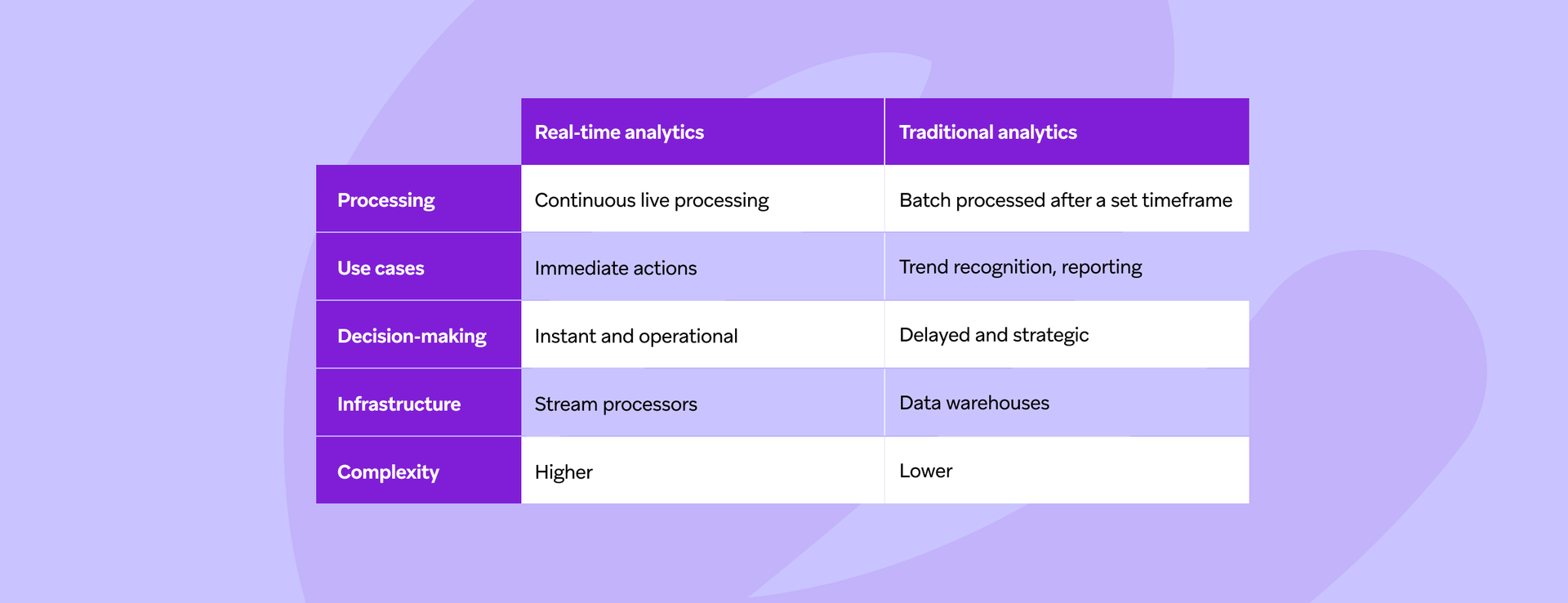

How does real-time data analysis differ from traditional analytics?

Real-time analytics differ from traditional analytics because they happen instantly, as soon as data is collected, generating immediate insights that can be actioned straight away.

In traditional analytics, data is processed in batches after a certain timeframe has passed. The insights are still sound but they’re less timely.

If a business relies on quick insights and decision-making, real-time data analytics are better.

Why is two-way synchronization essential in data streaming platforms?

Two-way synchronization means that information flows in both directions—data can be sent and received at the same time. This is important because it keeps everything up-to-date and working smoothly in real time.

For example, in a customer engagement system:

- When a user interacts with the platform (like clicking on a product), the system receives that data immediately.

- At the same time, the system can send a personalized response back to the user, like recommending similar products or offering a discount.

This back-and-forth exchange can help ensure the system has the latest data, gives customers instant feedback, and helps businesses respond quickly to user actions.
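The receive-then-respond loop described above might look like this in a simplified Python sketch. The `RELATED` catalog, event fields, and `send` callback are hypothetical stand-ins for a real product catalog and messaging channel.

```python
from typing import Callable

# Hypothetical catalog mapping a product to related products.
RELATED = {"scarf": ["gloves", "hat"], "tent": ["sleeping bag", "lantern"]}


def handle_inbound(
    event: dict,
    profiles: dict[str, dict],
    send: Callable[[str, dict], None],
) -> None:
    """One round trip: receive an interaction, update the profile, send a response."""
    user_id = event["user_id"]
    profile = profiles.setdefault(user_id, {"viewed": []})

    # Inbound: this sketch only tracks product clicks; other events are ignored.
    if event["name"] != "product_click":
        return
    profile["viewed"].append(event["product"])

    # Outbound: push a personalized response back, skipping anything already viewed.
    recs = [p for p in RELATED.get(event["product"], []) if p not in profile["viewed"]]
    if recs:
        send(user_id, {"type": "recommendation", "products": recs})


outbox: list[tuple[str, dict]] = []
profiles: dict[str, dict] = {}


def deliver(user: str, message: dict) -> None:
    outbox.append((user, message))  # stand-in for a real email, push, or in-app channel


handle_inbound({"user_id": "user-1", "name": "product_click", "product": "scarf"}, profiles, deliver)
handle_inbound({"user_id": "user-1", "name": "product_click", "product": "hat"}, profiles, deliver)
print(outbox)
# [('user-1', {'type': 'recommendation', 'products': ['gloves', 'hat']})]
```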

How can small businesses use data streaming for customer insights?

Small businesses can use data streaming to monitor customer interactions in real time and improve their customer experience, boosting satisfaction, loyalty, and customer value.

Thanks to customer engagement platforms like Braze, this technology is more accessible to small businesses than ever. For example, a local retailer could use streaming data to:

- Personalize customer journeys: Use live data to personalize the exact path a customer goes down, making each journey relevant to each user

- Optimize your campaign send times: If streaming data shows that customers are more likely to engage with your emails at a certain time and SMS at another, you can use intelligent timing tools to automatically send messages at the time a user is most likely to engage

- Engage high-value customers: If your live data reveals certain customers have engaged with your brand several times in a week, you could invite them to join your loyalty program or an exclusive in-store event
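As a rough illustration of the send-time idea, this sketch counts the hour of each engagement per user and channel and picks the most frequent one. It’s a naive frequency heuristic under stated assumptions (the `SendTimeEstimator` class and its default hour are hypothetical), not a description of how Braze’s intelligent timing tools actually work.

```python
from collections import Counter, defaultdict
from datetime import datetime


class SendTimeEstimator:
    """Hypothetical heuristic: each user's most frequent engagement hour, per channel."""

    def __init__(self) -> None:
        self._counts: dict[tuple[str, str], Counter] = defaultdict(Counter)

    def on_engagement(self, user_id: str, channel: str, at: datetime) -> None:
        """Update counts as each engagement event (open, click, reply) streams in."""
        self._counts[(user_id, channel)][at.hour] += 1

    def best_hour(self, user_id: str, channel: str, default: int = 10) -> int:
        """Hour of day (0-23) to send; falls back to `default` when there is no history."""
        counts = self._counts.get((user_id, channel))
        if not counts:
            return default
        return counts.most_common(1)[0][0]


est = SendTimeEstimator()
est.on_engagement("user-1", "email", datetime(2025, 3, 24, 8, 15))
est.on_engagement("user-1", "email", datetime(2025, 3, 25, 8, 40))
est.on_engagement("user-1", "email", datetime(2025, 3, 25, 21, 0))
est.on_engagement("user-1", "sms", datetime(2025, 3, 25, 19, 5))
print(est.best_hour("user-1", "email"))  # 8
print(est.best_hour("user-1", "sms"))    # 19
print(est.best_hour("user-2", "email"))  # 10 (no history, so the default)
```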

These are just some of the ways that data streaming and customer insights can improve engagement and boost revenue, as well as help your limited marketing budget have the highest impact.

How does Braze integrate data streaming within its platform for marketers?

Braze is built using a stream processing infrastructure to allow marketers to segment customers, trigger messaging, and personalize content based on real-time behavior.

Braze directly integrates with your organization’s data warehouse, customer data platform, digital properties – such as your website and app – and any other software that collects customer data. It then consolidates this data into holistic 360-degree customer profiles, so wherever you get your data, you can see and act on it from Braze.

- Stream processing infrastructure: Connects all of your data sources for a single source of truth

- Personalization at scale: Delivers dynamic personalization through the use of live data

- Event-based triggers: Processes live data to trigger timely messaging

- Cross-channel approach: Integrates all your messaging channels for joined-up customer comms

- Analytics: Provides actionable insights such as predicting churn or purchase intent

(If you want to get technical, read about our webhooks, SDKs, and APIs on the Braze Data Platform page.)

Braze then provides the tools to segment customers based on that data, build messaging flows to guide them through high-value actions, and trigger automatic messages at relevant points in their journey.

Braze also has tools to design and send messages across your full range of customer communication channels, including email, SMS, WhatsApp, in-app messaging, and more, along with intuitive testing tools for experimenting with new approaches.

Forward-Looking Statements

This blog post contains “forward-looking statements” within the meaning of the “safe harbor” provisions of the Private Securities Litigation Reform Act of 1995, including but not limited to, statements regarding the performance of and expected benefits from Braze and its products. These forward-looking statements are based on the current assumptions, expectations and beliefs of Braze, and are subject to substantial risks, uncertainties and changes in circumstances that may cause actual results, performance or achievements to be materially different from any future results, performance or achievements expressed or implied by the forward-looking statements. Further information on potential factors that could affect Braze results are included in the Braze Quarterly Report on Form 10-Q for the fiscal quarter ended October 31, 2024, filed with the U.S. Securities and Exchange Commission on December 10, 2024, and the other public filings of Braze with the U.S. Securities and Exchange Commission. The forward-looking statements included in this blog post represent the views of Braze only as of the date of this blog post, and Braze assumes no obligation, and does not intend to update these forward-looking statements, except as required by law.