AI A/B testing: Smarter experiments for real-time marketing optimization

Published on October 30, 2025/Last edited on October 30, 2025/13 min read

Marketers often wait weeks for A/B test results, only to find the winning variant is already outdated. Customer behavior shifts faster than traditional testing can keep up.

Applying AI can speed up parts of the traditional A/B testing process, but its bigger effect is on the pace of experimentation itself. In many cases, A/B testing can give way to real-time methods of experimentation and personalization that redirect effort toward what’s working and phase out what’s not. More advanced AI-driven experimentation evolves alongside your customers, across every channel.

In this guide, we’ll explore what AI A/B testing means, the benefits and limitations, and what it takes to run AI-powered tests responsibly. We’ll also look at how Braze helps teams bring continuous, AI-driven optimization into their marketing strategy.

Contents

Why marketers need AI A/B testing

Limitations and guardrails in AI-driven A/B testing

Real marketing use cases for machine learning A/B testing

A seven-step framework for running AI A/B tests responsibly

How to choose the right AI-driven A/B testing tools and platform

Final thoughts on AI A/B testing

AI A/B testing FAQs

What is AI A/B testing?

AI A/B testing, sometimes called AI split testing, applies machine learning to make A/B testing more adaptive and continuous. Traditional A/B testing compares different versions of a message, design, or experience by splitting an audience and tracking which version performs best. It has long been a reliable way to learn what resonates, but the process is static and slow: traffic stays split until the test ends, and only then is a winner declared. Adaptive methods such as multi-armed bandit (MAB) testing and reinforcement learning can shift traffic while a test is running, and multivariate testing (MVT) can evaluate combinations of elements at once. Rather than waiting weeks for a final result, AI-powered (or AI-assisted) A/B testing gives marketers tools and tactics to optimize while tests run.
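The fixed split at the heart of a traditional A/B test can be sketched in a few lines of Python. This is a minimal illustration, not a Braze API: `assign_variant` and `two_proportion_z_test` are hypothetical helper names, users are bucketed by hashing their ID, and the conversion counts are made up. Only after the test ends does a two-proportion z-test compare the two conversion rates.

```python
import hashlib
import math


def assign_variant(user_id: str, experiment: str = "welcome_email_test") -> str:
    """Deterministically split users 50/50 by hashing the experiment name and user ID."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    return "A" if int(digest, 16) % 2 == 0 else "B"


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Compare conversion rates of A and B; return (z, two-sided p-value)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail of the standard normal
    return z, p_value


# Hypothetical results after the test ends: 10,000 users per arm.
z, p = two_proportion_z_test(conv_a=500, n_a=10_000, conv_b=580, n_b=10_000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With these numbers z comes out around 2.5 (p near 0.01), so B looks like the winner. Note that traffic stayed locked at 50/50 for the whole test, even if B pulled ahead early; that fixed allocation is exactly what adaptive methods relax.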

That said, AI A/B testing can:

- Reallocate traffic to higher-performing variants in real time

- Generate and test multiple versions of copy, creative, offers, or timing

- Combine with other experimentation techniques, such as multivariate testing or multi-armed bandits, to maximize outcomes

- Keep experiments running continuously, avoiding the “winner plateau” of traditional tests

This creates a faster, more flexible approach to experimentation.

Why marketers need AI A/B testing

Traditional A/B testing was designed for a slower environment—one where customer behavior stayed relatively stable between the start and end of a test. That’s no longer the case. Preferences shift daily, and digital interactions generate more signals than manual tests can handle.

AI A/B testing aims to keep pace with customers. Because AI lets experiments adapt while they run, teams can explore more complex scenarios, from channel combinations to timing patterns, without slowing campaigns down.

Benefits of AI A/B testing

Key advantages of AI A/B testing include:

- Faster analysis: AI-powered systems can process batched or streaming data quickly to highlight performance patterns or change parameters while experiments are still running. This compresses testing cycles from weeks into days or even hours.

- Higher accuracy through anomaly detection: Machine learning reduces the impact of human bias and spots unusual results that might distort an experiment. This gives clearer, more dependable insights across complex tests.

- Predictive capabilities: By combining historical and live data, AI can anticipate likely outcomes and adapt experiments proactively. Campaigns stay aligned with customer behavior instead of reacting after the fact.

- Personalization from segments to individuals: AI can test and refine variants for micro-segments or even single customers. Creative, offers, and timing are matched to live signals, making each interaction more relevant.

- Ongoing optimization without plateau: Traditional tests stop once a winner is declared. AI-driven tests keep evolving, so campaigns improve continuously and results build over time.

- Scale of experimentation: AI can manage testing at a level impossible for human teams, running thousands of variations across channels in parallel. This gives marketers a much wider view of what drives performance.
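A simple statistical relative of the anomaly detection described above is the sample ratio mismatch (SRM) check: if a test was configured for a 50/50 split but the observed group sizes diverge more than chance allows, assignment or tracking is probably broken and the results shouldn’t be trusted. The sketch below is a plain chi-square test rather than a machine learning model, the function names are hypothetical, and the 0.001 threshold is a common convention assumed here, not a Braze setting.

```python
import math


def srm_p_value(observed: list[int], expected_ratios: list[float]) -> float:
    """Chi-square goodness-of-fit p-value for a two-group traffic split."""
    if len(observed) != 2 or len(expected_ratios) != 2:
        raise ValueError("this sketch handles exactly two groups (1 degree of freedom)")
    total = sum(observed)
    chi2 = sum(
        (obs - total * ratio) ** 2 / (total * ratio)
        for obs, ratio in zip(observed, expected_ratios)
    )
    # With 1 degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


def has_sample_ratio_mismatch(observed: list[int], expected_ratios: list[float],
                              alpha: float = 0.001) -> bool:
    """Flag the experiment for review if the split is implausible under the planned ratio."""
    return srm_p_value(observed, expected_ratios) < alpha


print(has_sample_ratio_mismatch([10_000, 10_100], [0.5, 0.5]))  # False: within normal noise
print(has_sample_ratio_mismatch([10_000, 10_600], [0.5, 0.5]))  # True: investigate first
```

A check like this runs cheaply on every refresh of the data, which is why it pairs well with experiments that are monitored continuously.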

AI A/B testing offers a powerful set of capabilities, but it also introduces new complexities and considerations. So the next step is to look at its challenges and what marketers need to have in place to use it responsibly.

Limitations and guardrails in AI-driven A/B testing

For many teams, the idea of AI-driven testing raises questions about control. If models are running experiments automatically, how do you know the results align with your brand or your strategy?

It comes down to system design. The strongest AI testing platforms are built with transparency and guardrails in mind. They operate within boundaries set by marketers: drawing on approved content, observing frequency caps, respecting compliance standards, and testing only within the parameters you define. For example, guardrails can block tests that would breach privacy laws such as GDPR. AI agents handle the complexity, but human judgment still defines success.

AI experimentation becomes a partner: reallocating traffic in real time, spotting anomalies, and surfacing insights that help you refine strategy. This balance of automation guided by accountability is what makes AI-driven testing sustainable.

How AI A/B testing works

AI takes on the heavy lifting of experimentation, automating what would otherwise be slow, manual tasks. Key responsibilities include:

- Idea generation: Proposing test ideas drawn from patterns in historical performance and customer data.

- Variant creation: Creating and managing multiple versions of copy, creative, offers, and timing for testing.

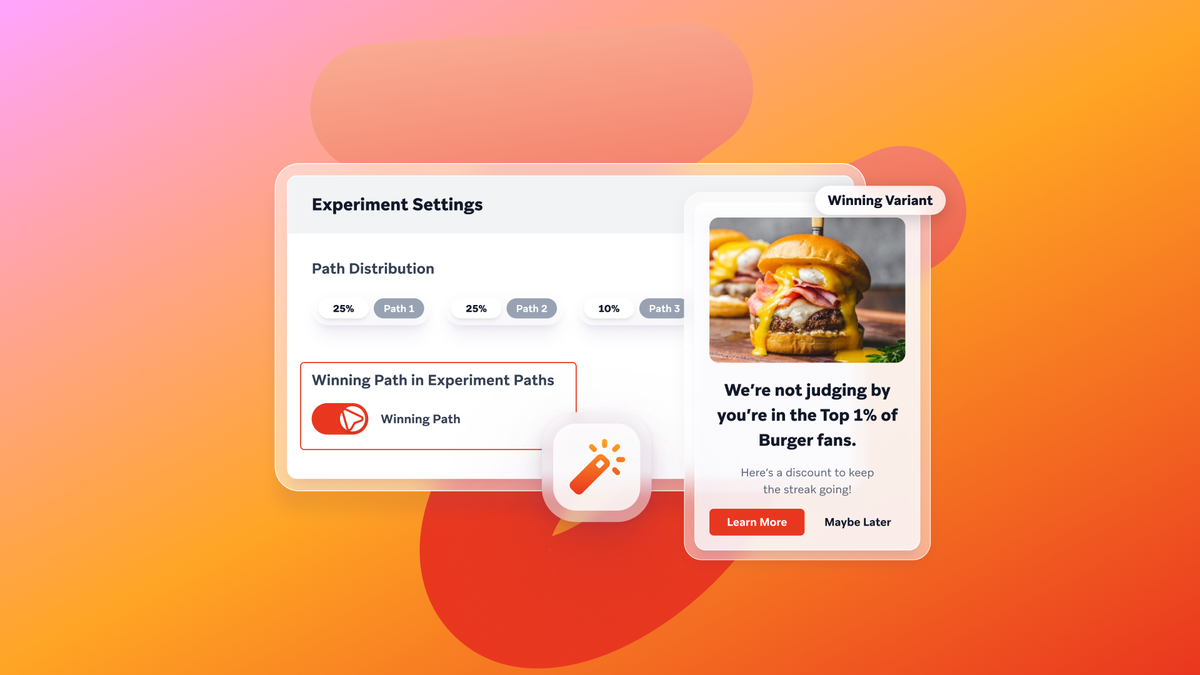

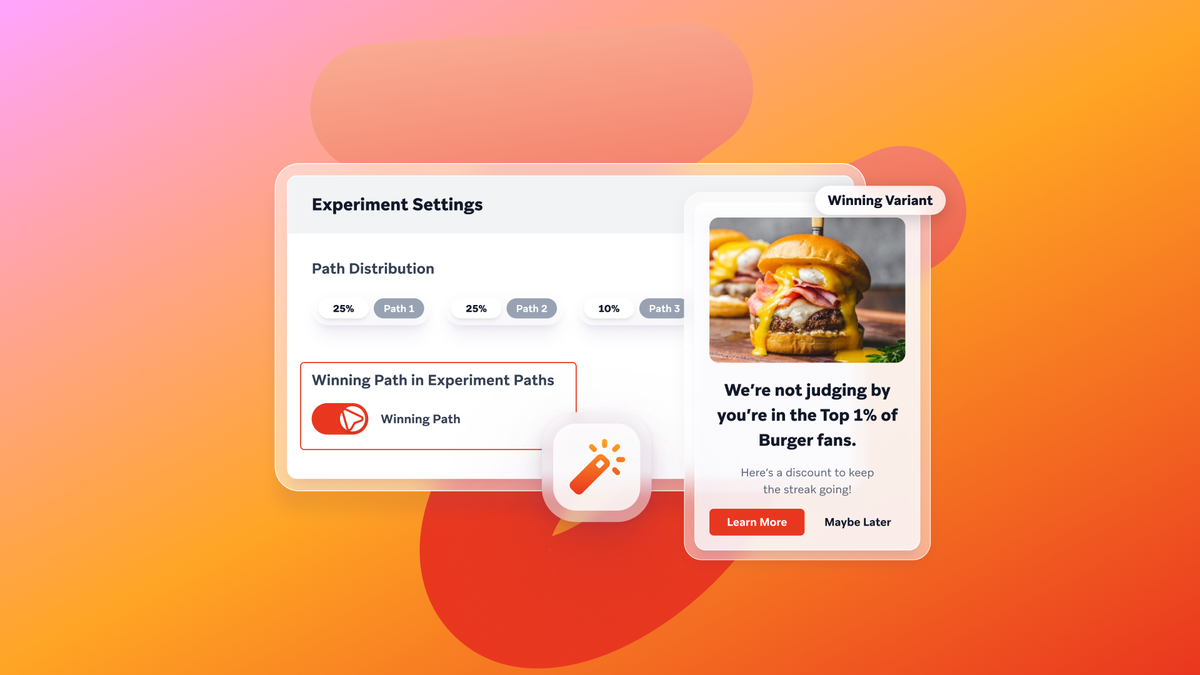

- Traffic allocation with multi-armed bandits: Directing more traffic toward stronger-performing variants while still testing alternatives.

- Measurement and reallocation: Tracking results continuously and adjusting distribution as customer behavior evolves.
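The traffic-allocation step above can be illustrated with Beta-Bernoulli Thompson sampling, one well-known multi-armed bandit algorithm. This is a minimal simulation under stated assumptions: the reward is binary (converted or not), the conversion rates for the three hypothetical variants are made up, and production systems, including Braze, may use different algorithms.

```python
import random


def thompson_sampling(true_rates: list[float], rounds: int, seed: int = 7) -> list[int]:
    """Simulate Thompson sampling; return how many times each variant was shown."""
    rng = random.Random(seed)
    successes = [0] * len(true_rates)
    failures = [0] * len(true_rates)
    pulls = [0] * len(true_rates)
    for _ in range(rounds):
        # Draw a plausible conversion rate for each variant from its Beta posterior
        # (uniform Beta(1, 1) prior) and show the variant with the highest draw.
        draws = [rng.betavariate(1 + s, 1 + f) for s, f in zip(successes, failures)]
        arm = max(range(len(draws)), key=draws.__getitem__)
        pulls[arm] += 1
        # Simulated customer response; in practice this comes from tracked events.
        if rng.random() < true_rates[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    return pulls


pulls = thompson_sampling([0.04, 0.06, 0.12], rounds=5_000)
print(pulls)  # most impressions typically go to the third variant
```

Unlike a fixed 50/50 split, the bandit spends fewer impressions on weaker variants while still occasionally testing them, at the cost of a less tidy final significance test.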

Real marketing use cases for machine learning A/B testing

AI-driven experimentation comes to life when applied to real campaigns. From offer personalization to send-time optimization to lifecycle flows, brands across industries are using Braze to test, learn, and adapt in real time. The following case studies show how companies are turning AI experimentation into measurable business wins.

Offer personalization drives ROI for Too Good To Go

Too Good To Go connects users with local restaurants and stores offering discounted “Surprise Bags” of unsold food—saving over 300 million meals from going to waste. They put offer personalization to the test, experimenting with discounts versus value-add messages to see which resonated with different segments and individuals.

The problem

With limited supply and location-specific availability of the Surprise Bags, generic offers often failed to convert. Messages that weren’t relevant to a user’s preferences or geography created drop-off and churn risk.

The solution

With Braze, the team combined customer preferences and behavior with live supply data to run AI-driven split tests on different approaches. They compared discount-led outreach against value-add notifications, like nearby availability alerts or themed campaigns using Braze Catalogs and Connected Content. This form of AI multivariate testing allowed Too Good To Go to scale personalization while keeping each message timely and relevant.

The results

The personalization experiment paid off. Message conversion rates doubled, and purchases attributed to CRM campaigns increased by 135%. By embedding online experimentation into their engagement strategy, Too Good To Go turned more app sessions into completed purchases and reinforced their mission to reduce food waste.

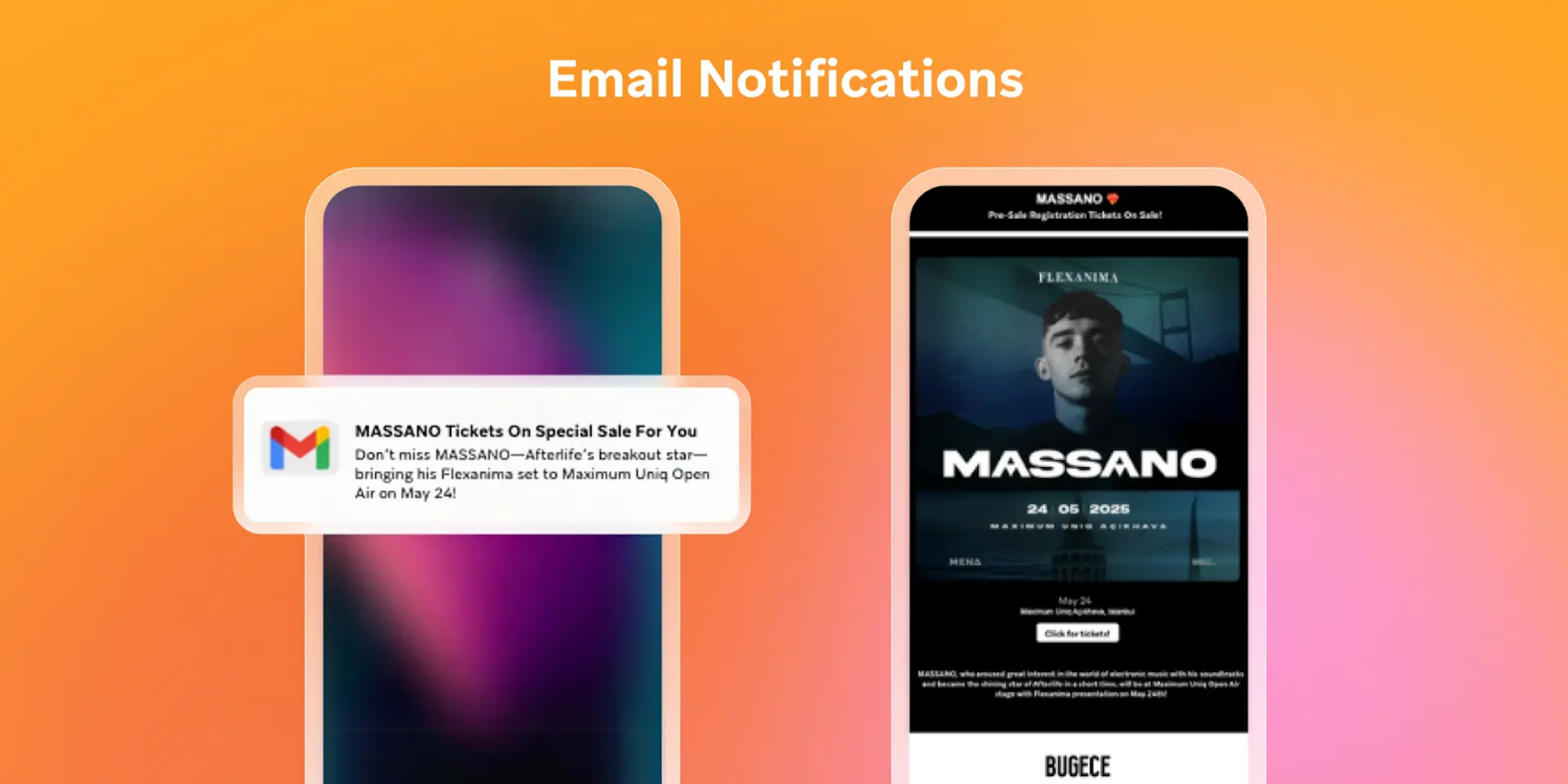

Smarter timing drives results for BUGECE

When it comes to customer engagement, when you send a message can be just as important as what you send. Send-time optimization powered by AI A/B testing helps brands experiment with timing windows in real time, learning when customers are most likely to engage and adjusting automatically. BUGECE, a music and events platform, put this into practice with Braze to boost signups and open rates.

The problem

Before adopting Braze, BUGECE relied on four separate tools to manage communications. This fragmented setup made it difficult for their small team to personalize timing or coordinate across channels. Without an AI A/B testing framework in place, engagement was inconsistent and conversion rates lagged behind expectations.

The solution

By consolidating onto Braze, BUGECE gained access to orchestration and BrazeAI™ capabilities like Intelligent Timing. This allowed them to test and optimize the exact moment messages were delivered, aligning push notifications, email, and in-app messages with each user’s behavior patterns. Campaigns dynamically queued messages for post-work hours—the peak ticket-buying period—while fallback logic made sure every user still received a relevant message at their next-best time.
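The internals of Intelligent Timing aren’t described here, so the following is only a hypothetical sketch of the general pattern in the BUGECE example: pick each user’s most engaged hour from their history, and fall back to an audience-wide peak when a user has too little data. The function name, the `min_events` threshold, and the hour lists are assumptions.

```python
from collections import Counter


def best_send_hour(user_open_hours: list[int], audience_open_hours: list[int],
                   min_events: int = 3) -> int:
    """Return the local hour (0-23) to send at, with a fallback for sparse histories.

    Assumes audience_open_hours is non-empty.
    """
    if len(user_open_hours) >= min_events:
        # Enough personal history: use the user's most frequent engagement hour.
        return Counter(user_open_hours).most_common(1)[0][0]
    # Too little history: fall back to when the audience as a whole engages most.
    return Counter(audience_open_hours).most_common(1)[0][0]


audience = [18, 19, 19, 19, 20, 12, 8]
print(best_send_hour([7, 7, 8, 21], audience))  # 7: this user's own peak hour
print(best_send_hour([22], audience))           # 19: audience fallback
```

A real system would likely weigh recency and keep testing nearby time windows rather than simply taking the historical most frequent hour.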

The results

With Intelligent Timing running in the background, BUGECE saw a 63% increase in email open rates and a 32% lift in signup conversion via in-app messaging. Treating timing as a variable within AI A/B testing not only improved the user experience but also gave BUGECE deeper insights into user intent signals, helping them design future campaigns with greater precision.

Panera personalizes every bite with cross-channel orchestration

AI-powered decisioning shows its real value when campaigns span the entire customer journey. Panera Bread, a leading fast-casual restaurant chain, put this into practice during the largest menu transformation in its history, using Braze to keep guests engaged while introducing more than 20 new and updated items.

The problem

Panera needed to launch new menu items without losing loyal customers, while also reactivating lapsed guests and building excitement—all across multiple channels, from email and mobile push to in-app messages and Content Cards.

The solution

By integrating its third-party AI decisioning with Braze, Panera coordinated campaigns across channels in real time. Messages dynamically matched offers and timing to customer segments. For example, sandwich lovers saw new sandwich launches, while frequent drink buyers received Sip Club nudges. Before launch, loyalty members voted on new menu items, giving Panera both engagement and valuable preference data.

The results

The campaign delivered millions of personalized, cross-channel interactions and saved over 50 hours of manual work. Outcomes included:

- 5% retention lift among at-risk guests

- 2X increase in loyalty offer redemptions

- 2X conversion lift on purchase campaigns

By orchestrating personalization across channels, Panera turned a high-stakes menu transformation into a loyalty-building opportunity.

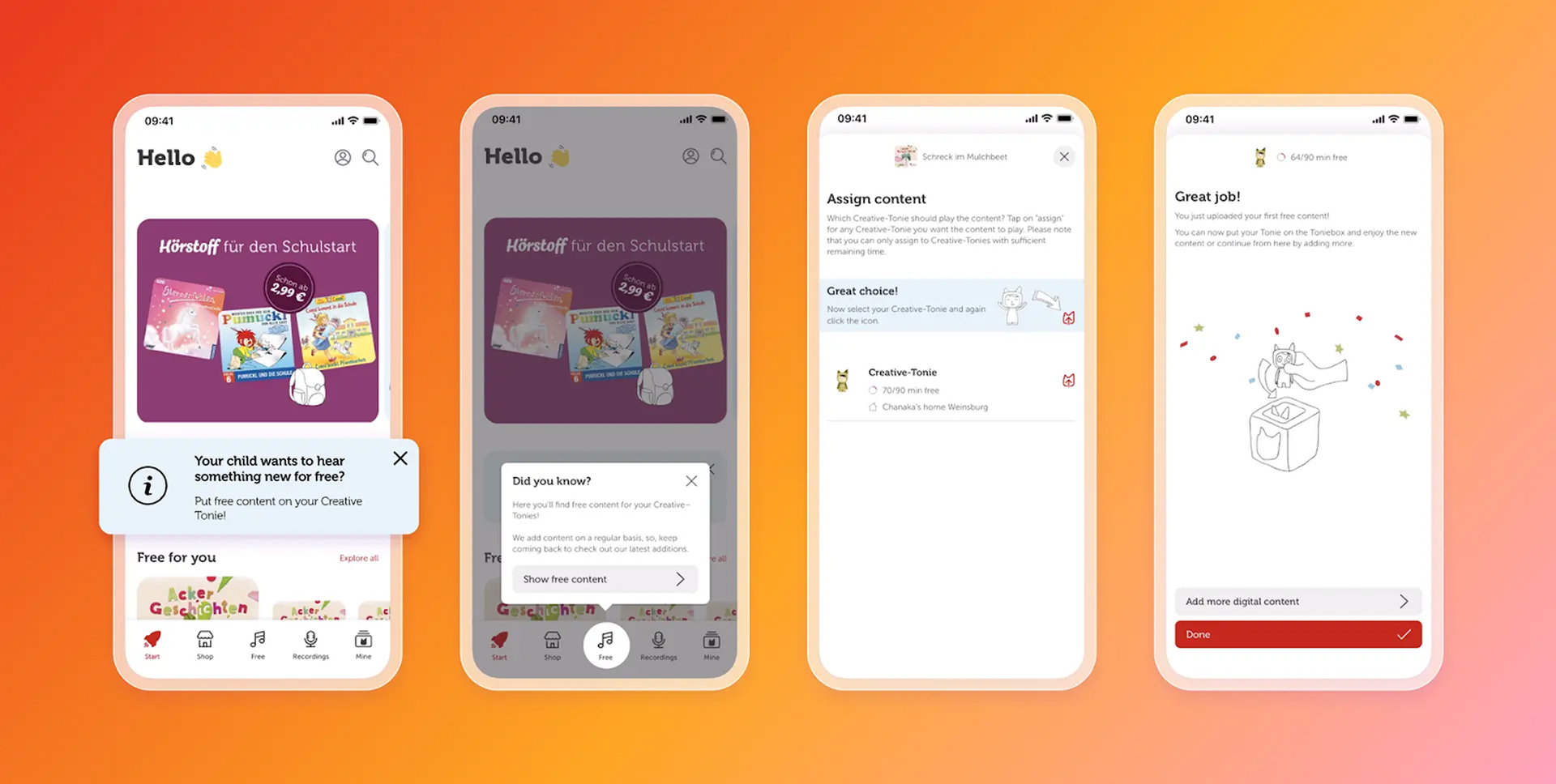

Tonies tunes customer journeys with smarter lifecycle campaigns

Tonies, the children’s audio entertainment brand, needed to drive engagement and long-term retention by guiding customers through key lifecycle moments. Using Braze, the team reimagined both their onboarding and upsell flows, turning free content into a gateway for sustained value and future purchases.

The problem

Tonies wanted to activate new users quickly and introduce them to the product’s core value, while also nudging existing users toward premium content and collectibles. Their legacy system lacked the segmentation and personalization needed to build journeys that could adapt to real-time customer behavior, leaving engagement and conversions flat.

The solution

With Braze, Tonies redesigned onboarding to highlight free content right away—a simple action that encouraged new users to return and explore more. Once users were active, personalized upsell campaigns promoted paid content based on their interests, using in-app messages, Content Cards, and push notifications to deliver timely prompts throughout the customer journey.

The results

Tonies transformed lifecycle engagement, seeing a 117% year-over-year increase in free-to-paid conversions. By connecting onboarding with feature activation flows, the brand created a sustainable path from first interaction to long-term loyalty.

A seven-step framework for running AI A/B tests responsibly

The most effective programs combine automation with oversight, so campaigns adapt quickly without losing sight of strategy. This seven-step framework helps marketers run tests that deliver meaningful results while staying in control.

1. Define KPIs that matter

Choose the business outcomes that guide your experiments—not just clicks or opens, but metrics like conversion rate, customer lifetime value (CLV), retention, or average revenue per user (ARPU). These goals set the foundation for how AI agents optimize.
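The KPI you choose changes which variant "wins." As a minimal sketch with hypothetical data and helper names, the snippet below summarizes conversion rate and ARPU per variant. ARPU is assumed here to mean revenue divided by all exposed users in that variant, not just converters.

```python
from dataclasses import dataclass


@dataclass
class UserOutcome:
    variant: str
    converted: bool
    revenue: float  # revenue attributed to this user during the test window


def kpis_by_variant(outcomes: list[UserOutcome]) -> dict[str, dict[str, float]]:
    """Summarize users, conversion rate, and ARPU (revenue per exposed user) per variant."""
    summary: dict[str, dict[str, float]] = {}
    for variant in sorted({o.variant for o in outcomes}):
        rows = [o for o in outcomes if o.variant == variant]
        summary[variant] = {
            "users": len(rows),
            "conversion_rate": sum(o.converted for o in rows) / len(rows),
            "arpu": sum(o.revenue for o in rows) / len(rows),
        }
    return summary


# Hypothetical data: A converts more often, B earns more per user.
data = [
    UserOutcome("A", True, 10.0), UserOutcome("A", True, 10.0),
    UserOutcome("A", False, 0.0), UserOutcome("A", False, 0.0),
    UserOutcome("B", True, 40.0), UserOutcome("B", False, 0.0),
    UserOutcome("B", False, 0.0), UserOutcome("B", False, 0.0),
]
print(kpis_by_variant(data))
```

Here A wins on conversion rate (0.5 vs 0.25) but B wins on ARPU (10.0 vs 5.0), so an optimizer rewarded on conversions would push traffic the opposite way from one rewarded on revenue.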

2. Decide what to test and create variants

Even with AI in the mix, effective experimentation starts with clarity. Marketers decide what to test—timing, channel mix, discounting, creative direction—and shape the variations that bring those ideas to life. AI can generate additional options and scale the process, but the starting point and strategy come from humans.
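Deciding the dimensions up front matters because combinations multiply. The sketch below, with hypothetical dimension names and values, expands marketer-chosen options into every combination (a full-factorial design). Each added dimension multiplies the variant count and thins the traffic each variant receives, which is one reason adaptive allocation becomes attractive.

```python
from itertools import product

# Hypothetical dimensions a marketer might choose to test.
dimensions = {
    "send_time": ["morning", "evening"],
    "channel": ["email", "push", "in_app"],
    "offer": ["10_percent_off", "free_delivery"],
}


def build_variants(dims: dict[str, list[str]]) -> list[dict[str, str]]:
    """Expand the chosen dimensions into every combination (a full-factorial design)."""
    keys = list(dims)
    return [dict(zip(keys, combo)) for combo in product(*(dims[k] for k in keys))]


variants = build_variants(dimensions)
print(len(variants))  # 12, since 2 * 3 * 2 = 12 combinations
print(variants[0])
```

AI can propose additional values for each dimension, but the dimensions themselves reflect the human decision about what is worth testing.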

3. Establish guardrails

AI systems work best inside defined boundaries. Guardrails can include brand voice rules, compliance constraints, frequency caps, or limits on discounting. With these controls in place, AI optimizes freely while staying aligned with brand strategy.
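One way to picture guardrails is as a filter that runs before the optimizer chooses anything, so the model can only pick from compliant options. This is a hypothetical sketch: the class names, the 20% discount ceiling, and the weekly cap of three messages are assumptions, and a single consent flag is a simplification of what privacy compliance actually requires.

```python
from dataclasses import dataclass

MAX_DISCOUNT_PCT = 20.0   # hypothetical business limit on discounting
WEEKLY_FREQUENCY_CAP = 3  # hypothetical cap on marketing messages per 7 days


@dataclass
class Candidate:
    variant_id: str
    discount_pct: float
    approved: bool  # passed brand-voice and compliance review


@dataclass
class UserState:
    has_marketing_consent: bool
    messages_last_7_days: int


def eligible_variants(candidates: list[Candidate], user: UserState) -> list[Candidate]:
    """Return only the variants the optimizer is allowed to choose for this user."""
    if not user.has_marketing_consent:
        return []  # no consent: nothing is eligible
    if user.messages_last_7_days >= WEEKLY_FREQUENCY_CAP:
        return []  # frequency cap reached: skip this send
    return [c for c in candidates if c.approved and c.discount_pct <= MAX_DISCOUNT_PCT]


pool = [Candidate("a", 10, True), Candidate("b", 30, True), Candidate("c", 5, False)]
print([c.variant_id for c in eligible_variants(pool, UserState(True, 1))])  # ['a']
```

Keeping the guardrails outside the model means they hold regardless of what the optimizer learns.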

4. Roll out in stages

Start small—launch tests with a small percentage of the audience (e.g., 5%) and scale gradually to 20%, then 50% as confidence grows. This “canary rollout” approach limits risk while giving AI time to learn.
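A staged rollout like this is often implemented with stable hash buckets, so anyone exposed at 5% stays exposed at 20% and 50%. The sketch below assumes hypothetical function names and a salt string; only the 5% to 20% to 50% stages come from the step above.

```python
import hashlib

ROLLOUT_STAGES = [5, 20, 50]  # percent of audience exposed at each stage


def rollout_bucket(user_id: str, salt: str = "new_onboarding_flow") -> int:
    """Map a user to a stable bucket from 0 to 99."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode()).digest()
    return int.from_bytes(digest[:8], "big") % 100


def in_experiment(user_id: str, stage: int) -> bool:
    """True if the user is exposed at the given rollout stage index."""
    return rollout_bucket(user_id) < ROLLOUT_STAGES[stage]


users = [f"user-{i}" for i in range(20_000)]
exposed = [{u for u in users if in_experiment(u, s)} for s in range(len(ROLLOUT_STAGES))]
print([len(group) for group in exposed])  # roughly 1,000 / 4,000 / 10,000
```

Because buckets never change, widening the rollout only adds users; nobody who already saw the new experience is switched back mid-test.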

5. Measure continuously

AI platforms track performance in real time, reallocating traffic to stronger variants as results shift. Marketers should review outcomes regularly, validating that experiments align with both KPIs and brand context.
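When results shift, a bandit that weighs all history equally can be slow to notice. One common adjustment, sketched here under assumed parameters, is discounted Thompson sampling: every round, past evidence for all variants fades by a factor `gamma`, so recent behavior counts more. The decay of 0.99 (an effective memory of roughly 100 observations), the function names, and the simulated mid-test swap in conversion rates are illustrative only.

```python
import random
from typing import Callable


def discounted_thompson(rate_schedule: Callable[[int], list[float]], rounds: int,
                        gamma: float = 0.99, seed: int = 11) -> list[int]:
    """Thompson sampling whose evidence decays by `gamma` each round.

    rate_schedule(t) returns the true conversion rates at round t (simulation only).
    Returns the variant chosen at each round.
    """
    rng = random.Random(seed)
    n_arms = len(rate_schedule(0))
    successes = [0.0] * n_arms
    failures = [0.0] * n_arms
    choices = []
    for t in range(rounds):
        draws = [rng.betavariate(1 + s, 1 + f) for s, f in zip(successes, failures)]
        arm = max(range(n_arms), key=draws.__getitem__)
        choices.append(arm)
        # Fade old evidence for every variant so recent behavior dominates.
        successes = [gamma * s for s in successes]
        failures = [gamma * f for f in failures]
        if rng.random() < rate_schedule(t)[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    return choices


def swap_halfway(t: int) -> list[float]:
    """Hypothetical drift: variant 0 is best at first, then variant 1 takes over."""
    return [0.20, 0.05] if t < 2_000 else [0.05, 0.20]


choices = discounted_thompson(swap_halfway, rounds=4_000)
print(choices[1_000:2_000].count(0), choices[3_000:].count(1))
```

A smaller `gamma` reacts faster to drift but makes estimates noisier; that tradeoff is one reason marketers should still review outcomes rather than trust reallocation blindly.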

6. Learn and adapt

Reinforcement learning keeps campaigns evolving as customer behavior changes. Insights from these experiments can guide strategy across the wider lifecycle, from onboarding to retention and loyalty.

7. Keep humans in the loop

Even the most advanced AI can’t make sense of customer emotions, brand nuance, or long-term positioning. Marketers remain the final decision-makers, interpreting results and steering experiments toward the right business outcomes.

How to choose the right AI-driven A/B testing tools and platform

The right platform turns testing from isolated exercises into a continuous engine for smarter engagement and a core part of your marketing strategy. Choosing carefully gives marketers confidence that experimentation is scalable, reliable, and aligned with both business goals and customer expectations.

When evaluating AI A/B testing platforms, look for capabilities that support enterprise needs:

- Real-time experimentation: Continuous testing and traffic reallocation while campaigns are live, not after the fact.

- Cross-channel reach: The ability to run tests across different channels, including email, push, SMS, in-app—not just on web or landing pages.

- Built-in guardrails: Tools that respect frequency caps, privacy compliance, and brand voice, keeping experiments safe and consistent.

- Advanced optimization methods: Support for techniques like multi-armed bandits and reinforcement learning, enabling ongoing improvement rather than static tests.

- Personalization at scale: The flexibility to move from segment-level testing to individualized experiences, informed by first-party and behavioral data.

- Actionable insights: Clear reporting that ties results to KPIs such as CLV, ARPU, and retention, helping teams connect experimentation directly to business outcomes.

The most powerful platforms bring these elements together—orchestration and AI decisioning—so that experiments flow directly into real-time customer journeys.

Final thoughts on AI A/B testing

AI A/B testing is reshaping how marketers approach experimentation. With the ability to adapt in real time, run tests at scale, and deliver personalization down to the individual, it shifts testing from a slow, manual process into an always-on driver of customer engagement.

When experimentation is embedded into live journeys through orchestration and AI decisioning, every interaction becomes an opportunity to learn and improve. The result is marketing that’s faster, more relevant, and more resilient.

Discover how Braze powers AI-driven testing and decisioning to help brands experiment, learn, and personalize in real time.

AI A/B testing FAQs

What is AI A/B testing?

AI A/B testing is a method of experimentation where artificial intelligence aids traditional A/B testing to design, manage, and optimize variations in real time, adapting continuously to customer behavior.

How is AI A/B testing different from traditional A/B testing?

AI A/B testing differs from traditional A/B testing by using artificial intelligence to adjust parameters, reallocate traffic dynamically, or manage multiple variables across channels while a test is still running, rather than waiting for the test to conclude.

What are the benefits of AI A/B testing?

The benefits of AI A/B testing include faster analysis, higher accuracy, predictive capabilities, deeper personalization, continuous optimization, and the ability to test at scale.

What are the limitations of AI A/B testing?

The limitations of AI A/B testing include needing large quantities of high-quality data, managing privacy and compliance, and the challenge of autonomous decisions without clear explanations. Human oversight is essential.

How do you run AI A/B tests responsibly?

To run AI A/B tests or any other AI-driven experimentation responsibly, marketers should set KPIs, plan variations, add guardrails, test and roll out gradually, and review results regularly with human oversight.

What are examples of AI A/B testing in marketing?

Examples of AI A/B testing in marketing include testing onboarding flows, optimizing send times, comparing discount versus loyalty offers, and refining channel mix across email, SMS, and in-app messages.

How does Braze support AI A/B testing?

Braze goes well beyond AI A/B testing with its BrazeAI Decisioning Studio™, which uses reinforcement learning through contextual bandits, allowing AI agents to personalize decisions for each customer. This means the system not only finds what works best overall but adapts to individual preferences and context, delivering real-time 1:1 personalization at an unprecedented scale.
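The internals of BrazeAI Decisioning Studio aren’t described here, so the following is only a simplified illustration of the contextual-bandit idea: keep a separate Beta posterior for each (context, action) pair so the best action can differ by customer context. The segment names, offers, and conversion rates are hypothetical, and practical contextual bandits usually generalize across contexts using customer features (for example, linear models such as LinUCB) rather than a lookup table.

```python
import random
from collections import defaultdict


class SegmentedThompson:
    """Tabular contextual bandit: one Beta-Bernoulli posterior per (context, action)."""

    def __init__(self, actions: list[str], seed: int = 3):
        self.actions = list(actions)
        self.rng = random.Random(seed)
        # [successes, failures] per (context, action); unseen pairs start at [0, 0].
        self.stats = defaultdict(lambda: [0, 0])

    def choose(self, context: str) -> str:
        def draw(action: str) -> float:
            s, f = self.stats[(context, action)]
            return self.rng.betavariate(1 + s, 1 + f)
        return max(self.actions, key=draw)

    def update(self, context: str, action: str, converted: bool) -> None:
        self.stats[(context, action)][0 if converted else 1] += 1


# Hypothetical ground truth: each segment prefers a different offer.
true_rates = {
    ("deal_seeker", "discount"): 0.15, ("deal_seeker", "loyalty_points"): 0.05,
    ("loyal_regular", "discount"): 0.05, ("loyal_regular", "loyalty_points"): 0.15,
}
bandit = SegmentedThompson(["discount", "loyalty_points"])
sim = random.Random(42)
for _ in range(4_000):
    segment = sim.choice(["deal_seeker", "loyal_regular"])
    offer = bandit.choose(segment)
    bandit.update(segment, offer, sim.random() < true_rates[(segment, offer)])
```

After the simulation, deal seekers are mostly shown the discount and loyal regulars mostly see loyalty points, even though neither offer is "best overall."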
